Sustainable AI

Enabling computationally efficient and environmentally sustainable frameworks for high-performance artificial intelligence (AI) and machine learning (ML).

MERL Researchers: Toshiaki Koike-Akino, Ye Wang, Pu Wang, Jing Liu, Matthew Brand, Kieran Parsons.

Overview

Artificial intelligence (AI), encompassing machine learning (ML) and deep learning (DL), has profoundly transformed various aspects of our lives, from everyday solutions to societal services and industrial evolutions. Particularly, the advent of Generative AI, such as GPT (generative pre-trained transformer) and diffusion-based models, has been revolutionizing a multitude of applications in recent years.

While these advanced AI systems have achieved remarkable performance, the computational complexity, power consumption, and storage requirements have been skyrocketing. For instance, modern large language models (LLMs) often require billions or even trillions of model parameters. This immense scale comes at a significant cost in terms of the high computing power needed for training and inference, which may lead to a substantial increase in carbon dioxide (CO2) emissions. Striking a balance between technological advancements and sustainable practices will be a key challenge for the AI community in the years to come.

MERL's Initiatives: A Greener Path for AI

Mitsubishi Electric Research Laboratories (MERL) is striving to develop sustainable AI solutions through our Green AI initiative. As the demand for computational resources in modern AI continues to grow, we recognize the urgent need to explore innovative techniques that improve efficiency across all aspects of AI development and deployment.

Our research focuses on several key areas:

- Parameter-Efficient Fine-Tuning (PEFT): We are developing methods to adapt pre-trained models to new tasks with minimal additional parameters, significantly reducing computational requirements while maintaining performance.

- Quantization and Pruning: By reducing the precision of model weights and removing unnecessary connections, we can dramatically decrease model size and inference time without sacrificing accuracy.

- Knowledge Distillation: Our techniques transfer knowledge from large, complex models to smaller, more efficient ones, enabling powerful AI capabilities on resource-constrained devices.

- Natural Computing: We are exploring new computing paradigms based on natural systems, potentially revolutionizing how AI algorithms are designed and deployed.

Through these efforts, MERL aims to create AI systems that are not only powerful but also environmentally responsible. By pushing the boundaries of efficient AI, we are working towards a future where advanced AI and environmental sustainability go hand in hand. On this highlight page, we provide a brief introduction to each of our selected papers on green AI:

- Low-dimensional adaptation (LoDA): MERL TR2023-150 (NeurIPS 2023)

- Efficient privacy: MERL TR2024-104 (ICML 2024), arXiv

- SuperLoRA: MERL TR2024-062 (CVPR 2024), MERL TR2024-156 (BMVC 2024), arXiv, CVPR ECV24

- Quantum-PEFT: MERL TR2024-101 (ICML 2024), ICML 2024 Workshop ES-FoMo-II

- Multiplier-free AI: MERL TR2021-110 (ECOC 2021), MERL TR2023-096 (MWSCAS 2023)

- Hardware-efficient quantization (HEQ): MERL TR2024-105 (ICML 2024), ICML 2024 Workshop ES-FoMo-II

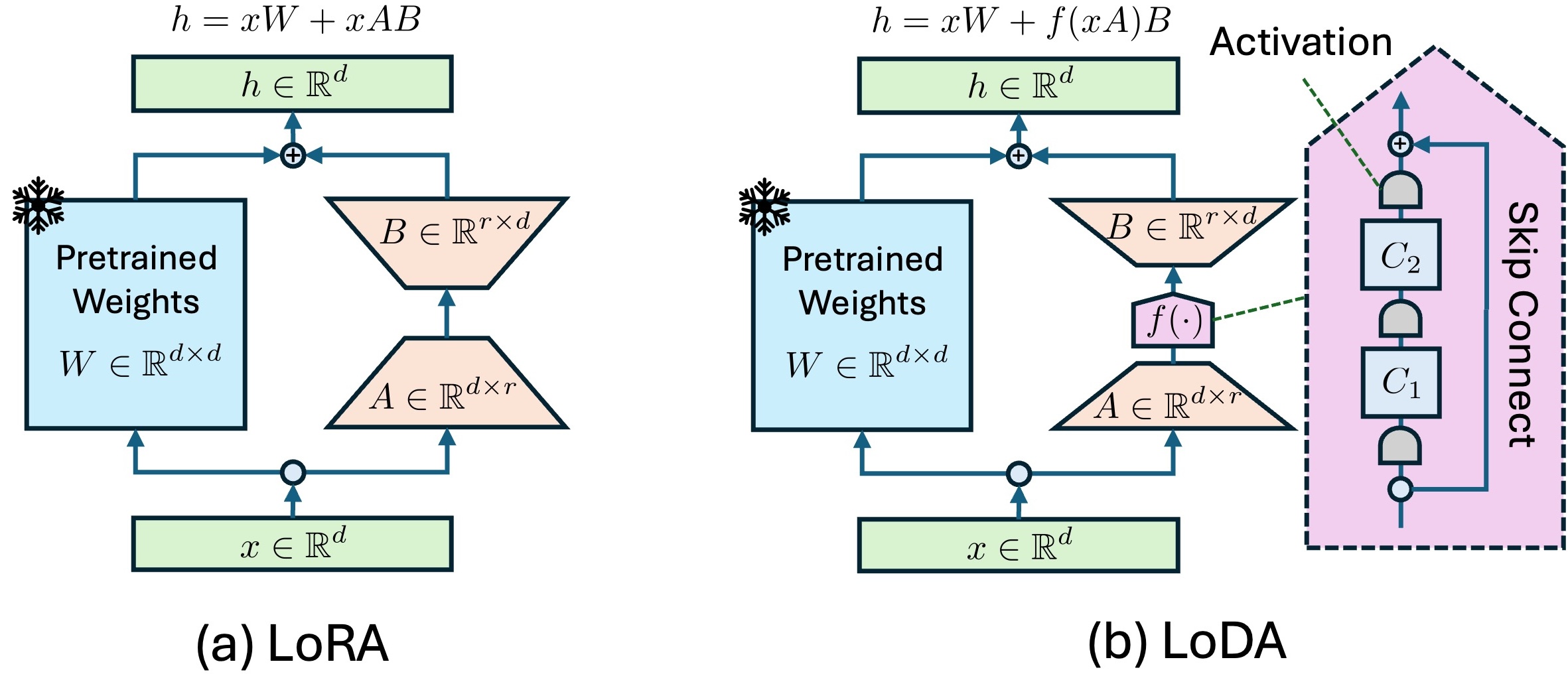

LoDA: Low-Dimensional Adaptation

While low-rank adaptation (LoRA) has shown impressive results as a promising PEFT method for fine-tuning large models with fewer parameters, it is fundamentally limited to linear subspace adaptations. We introduced a new approach called LoDA (low-dimensional adaptation), taking the next step by generalizing LoRA to low-dimensional, non-linear adaptations. Key features of LoDA include:

- Non-linear Adaptations: LoDA introduces trainable non-linear functions within the LoRA architecture, allowing for more expressive and flexible model updates.

- Backwards Compatible: LoDA fully encompasses LoRA as a special case, ensuring high compatibility with existing LoRA frameworks.

- Parameter Efficiency: Despite its increased expressiveness, LoDA keeps the number of tunable parameters similar to that of LoRA by using a narrow multi-layer perceptron and parameter sharing.
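The relationship between LoRA and a non-linear low-dimensional update can be illustrated with a small sketch. It assumes a residual form f(z) = z + H2 act(H1 z) for the narrow non-linear map; the matrices `H1` and `H2`, the `tanh` activation, and all toy values are illustrative assumptions rather than the architecture from the technical report.

```python
import math

def matvec(M, v):
    """Dense matrix-vector product on plain Python lists."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def lora_update(A, B, x):
    """LoRA: delta = B (A x), a linear map through an r-dimensional bottleneck."""
    return matvec(B, matvec(A, x))

def loda_update(A, B, H1, H2, x, act=math.tanh):
    """LoDA-style sketch: delta = B f(A x) with f(z) = z + H2 act(H1 z).

    H1 and H2 are hypothetical r x r matrices; with H2 = 0 this reduces to LoRA.
    """
    z = matvec(A, x)
    hidden = [act(h) for h in matvec(H1, z)]
    z_nl = [zi + ci for zi, ci in zip(z, matvec(H2, hidden))]
    return matvec(B, z_nl)

# Toy sizes: input dimension 3, bottleneck r = 2, output dimension 3.
A = [[0.1, -0.2, 0.3], [0.0, 0.5, -0.1]]
B = [[1.0, 0.0], [0.5, -1.0], [0.2, 0.3]]
H1 = [[0.7, -0.4], [0.2, 0.9]]
H2_zero = [[0.0, 0.0], [0.0, 0.0]]
H2 = [[0.3, 0.0], [0.0, -0.6]]
x = [1.0, 2.0, -1.0]

linear = lora_update(A, B, x)
same_as_lora = loda_update(A, B, H1, H2_zero, x)   # non-linear branch switched off
nonlinear = loda_update(A, B, H1, H2, x)           # non-linear branch active
```

In this sketch the extra trainable values are only the two r x r matrices, which is small when r is much smaller than the layer width.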

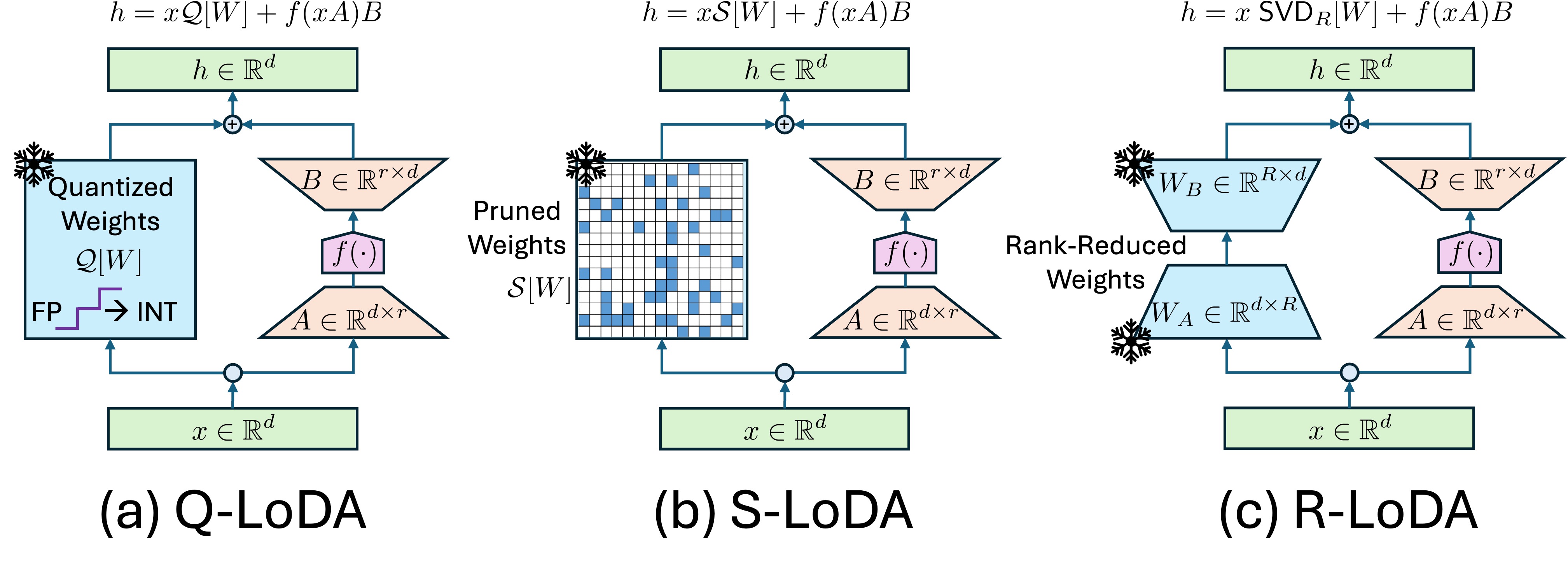

To improve computational efficiency, we further integrated model distillation with LoDA:

- Q-LoDA: Quantizes pretrained weights before LoDA fine-tuning

- S-LoDA: Sparsifies pretrained weights before LoDA fine-tuning

- R-LoDA: Performs rank-reduced approximation of pretrained weights before LoDA fine-tuning
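As a rough illustration of the first two variants, the sketch below compresses a frozen weight matrix before any adapter would be trained on top of it. Uniform quantization and magnitude-based sparsification are assumed here for simplicity; the function names and the specific schemes are illustrative choices, not necessarily those used in the report. The rank-reduced variant is omitted because it needs a matrix decomposition routine.

```python
def quantize_uniform(W, bits=4):
    """Round every entry of W to one of 2**bits evenly spaced levels over [min, max]."""
    flat = [w for row in W for w in row]
    lo, hi = min(flat), max(flat)
    steps = (1 << bits) - 1
    scale = (hi - lo) / steps if hi > lo else 1.0
    return [[lo + round((w - lo) / scale) * scale for w in row] for row in W]

def sparsify_magnitude(W, keep_ratio=0.5):
    """Keep only the largest-magnitude entries of W and set the rest to zero."""
    flat = sorted((abs(w) for row in W for w in row), reverse=True)
    k = max(1, int(len(flat) * keep_ratio))
    threshold = flat[k - 1]
    return [[w if abs(w) >= threshold else 0.0 for w in row] for row in W]

W = [[0.9, -0.05, 0.4], [-0.7, 0.02, 0.1]]
W_q = quantize_uniform(W, bits=2)    # frozen base for a Q-LoDA-style setup
W_s = sparsify_magnitude(W, 0.5)     # frozen base for an S-LoDA-style setup
```

The adapter is then trained on top of `W_q` or `W_s`, so the compressed base weights stay fixed during fine-tuning.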

These LoDA variants significantly reduce computational costs for both training and inference while maintaining performance. Our evaluations on natural language generation tasks demonstrate that LoDA variants outperform their LoRA counterparts in capturing task-specific knowledge. For more details, please refer to our technical report: TR2023-150 LoDA: Low-Dimensional Adaptation of Large Language Models. A recorded presentation of our work is available here: NeurIPS Workshop: ENLSP-III.

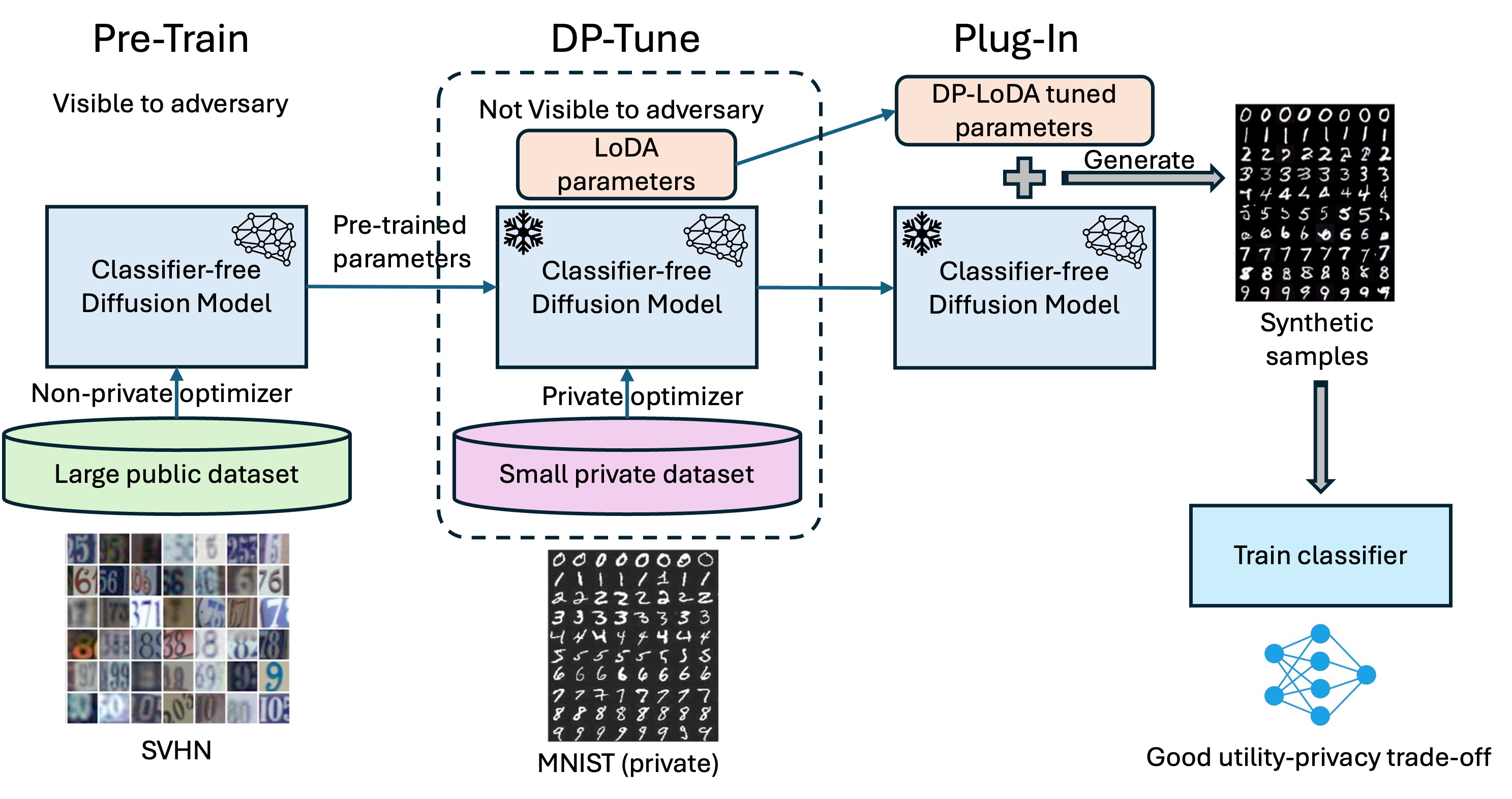

LoDA for Efficient Privacy-Preserving AI

We proposed an efficient and privacy-preserving AI framework using LoDA with differential privacy. Our work addresses a critical challenge in the field of generative AI, particularly with diffusion models (DMs).

Diffusion models have emerged as a powerful tool for generating high-quality synthetic samples. Recent studies have shown that DMs pre-trained on public data and fine-tuned with differential privacy on private data can produce synthetic samples suitable for training downstream classifiers. This approach offers an excellent balance between privacy protection and utility. However, the conventional method of fully fine-tuning large DMs using differentially private stochastic gradient descent (DP-SGD) is extremely resource-intensive, requiring significant memory and computational power.
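To make the cost of DP-SGD concrete, here is a minimal sketch of one privatized gradient computation: each per-example gradient is clipped, the clipped gradients are summed, Gaussian noise is added, and the result is averaged. The function name, argument names, and toy values are assumptions for illustration. Because a separate gradient is handled for every example, memory grows with the number of trainable parameters, which is why restricting training to a small adapter helps.

```python
import math
import random

def dp_sgd_gradient(per_example_grads, clip_norm, noise_multiplier, rng):
    """Clip each per-example gradient to clip_norm, sum, add Gaussian noise, and average."""
    dim = len(per_example_grads[0])
    total = [0.0] * dim
    for g in per_example_grads:
        norm = math.sqrt(sum(v * v for v in g))
        factor = min(1.0, clip_norm / norm) if norm > 0 else 1.0
        for i, v in enumerate(g):
            total[i] += v * factor
    sigma = noise_multiplier * clip_norm          # noise scale tied to the clipping bound
    noisy = [t + rng.gauss(0.0, sigma) for t in total]
    return [n / len(per_example_grads) for n in noisy]

rng = random.Random(0)
grads = [[3.0, 4.0], [0.3, 0.4]]                  # gradient norms 5.0 and 0.5
noiseless = dp_sgd_gradient(grads, clip_norm=1.0, noise_multiplier=0.0, rng=rng)
private = dp_sgd_gradient(grads, clip_norm=1.0, noise_multiplier=1.0, rng=rng)
```

With the noise switched off, the first gradient is scaled down to unit norm and the second is left untouched, which shows the clipping step in isolation.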

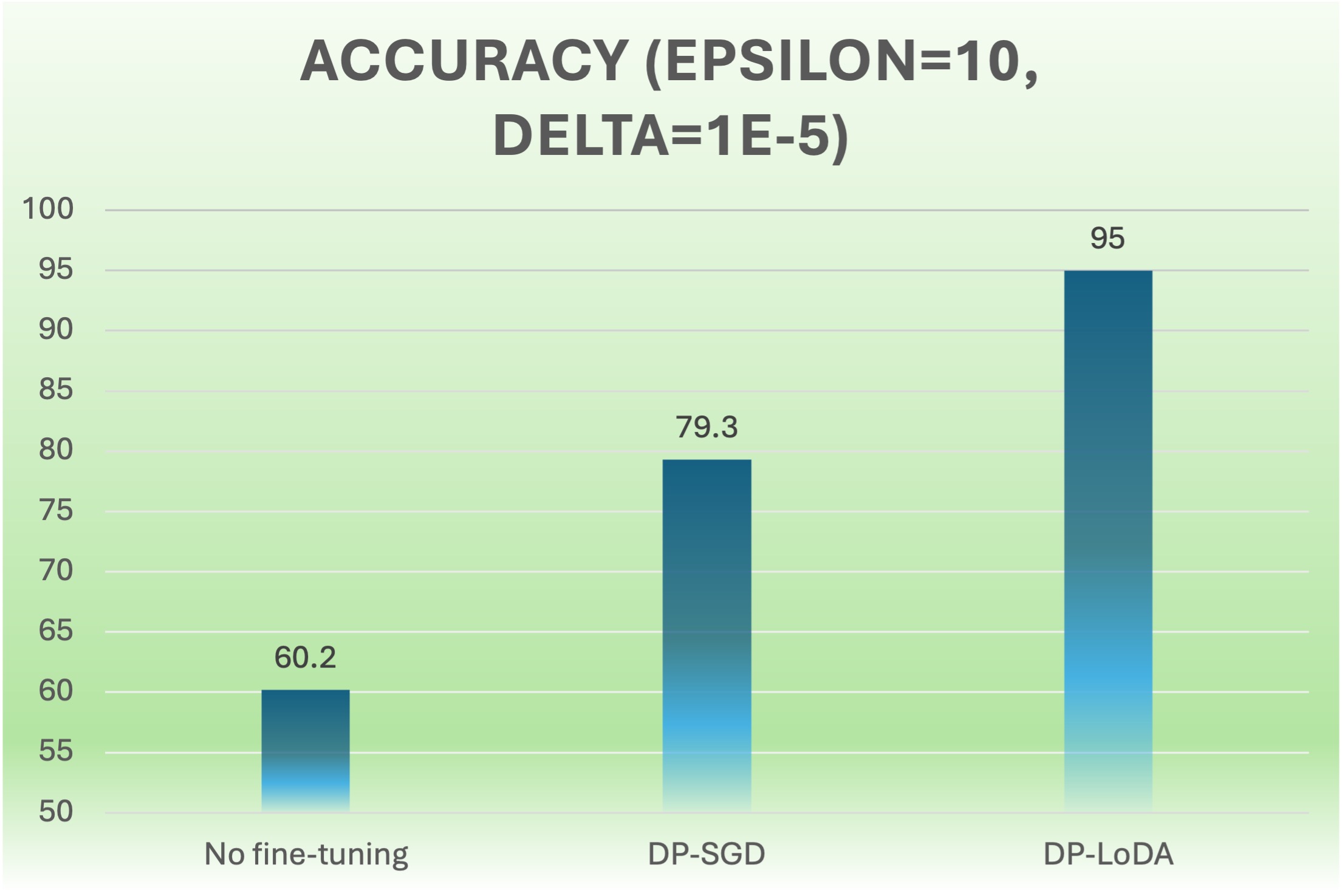

To address this challenge, we introduce DP-LoDA, a framework that integrates LoDA with differential privacy (DP). This approach allows for efficient fine-tuning of DMs while maintaining strong privacy guarantees. Key benefits of DP-LoDA include:

- Efficiency: DP-LoDA significantly reduces the computational resources required for fine-tuning large diffusion models.

- Privacy Protection: Our method ensures guaranteed privacy protection for the sensitive data used in fine-tuning.

- Utility Preservation: Despite the privacy constraints, DP-LoDA enables the generation of high-quality synthetic samples that are effective for training downstream classifiers.

On benchmark datasets including MNIST and CIFAR-10, our results demonstrate that DP-LoDA generates useful synthetic samples for training downstream classifiers while maintaining strong privacy guarantees. For more details, please refer to our technical report: TR2024-104 Efficient Differentially Private Fine-Tuning of Diffusion Models. The corresponding preprint is available on arXiv.

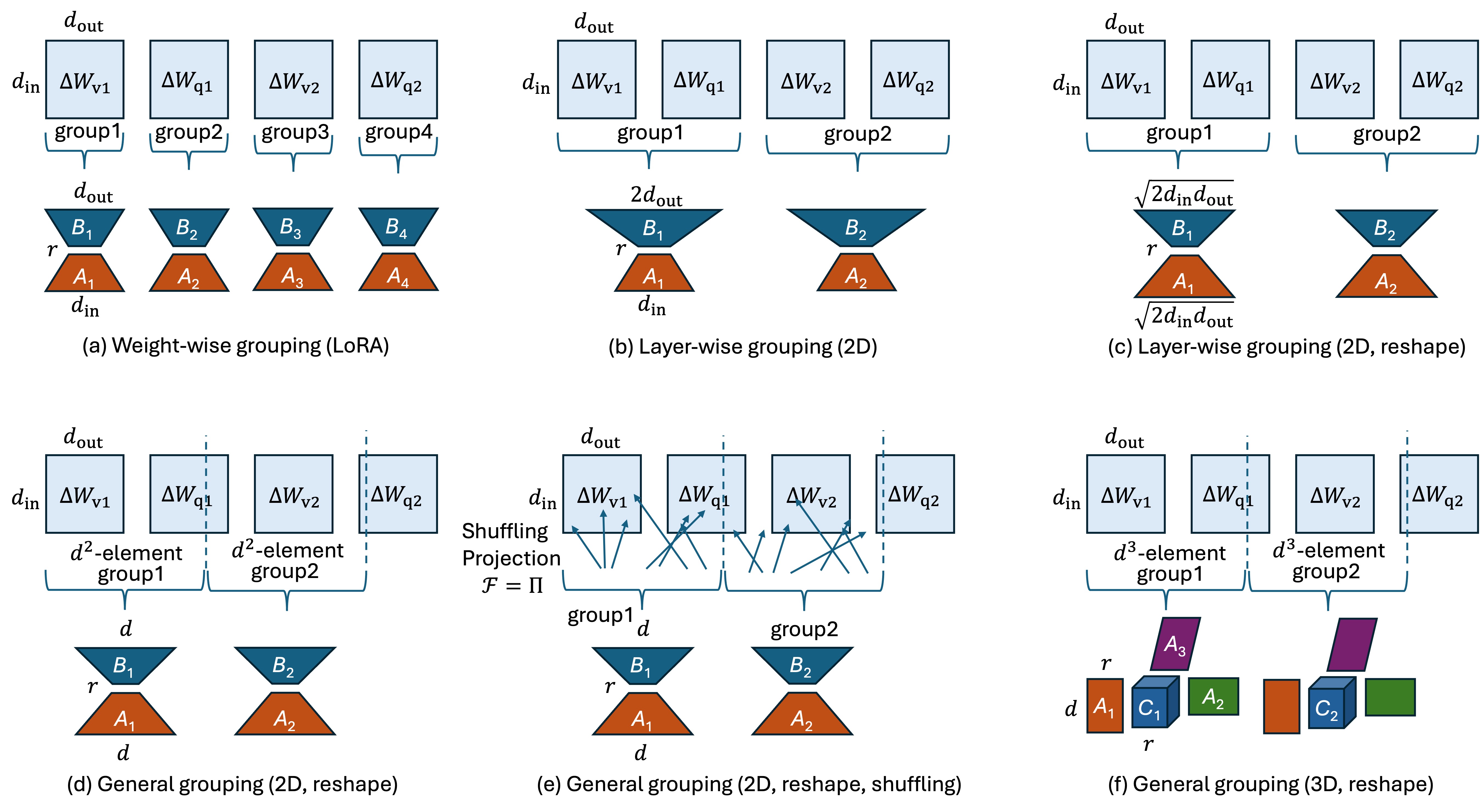

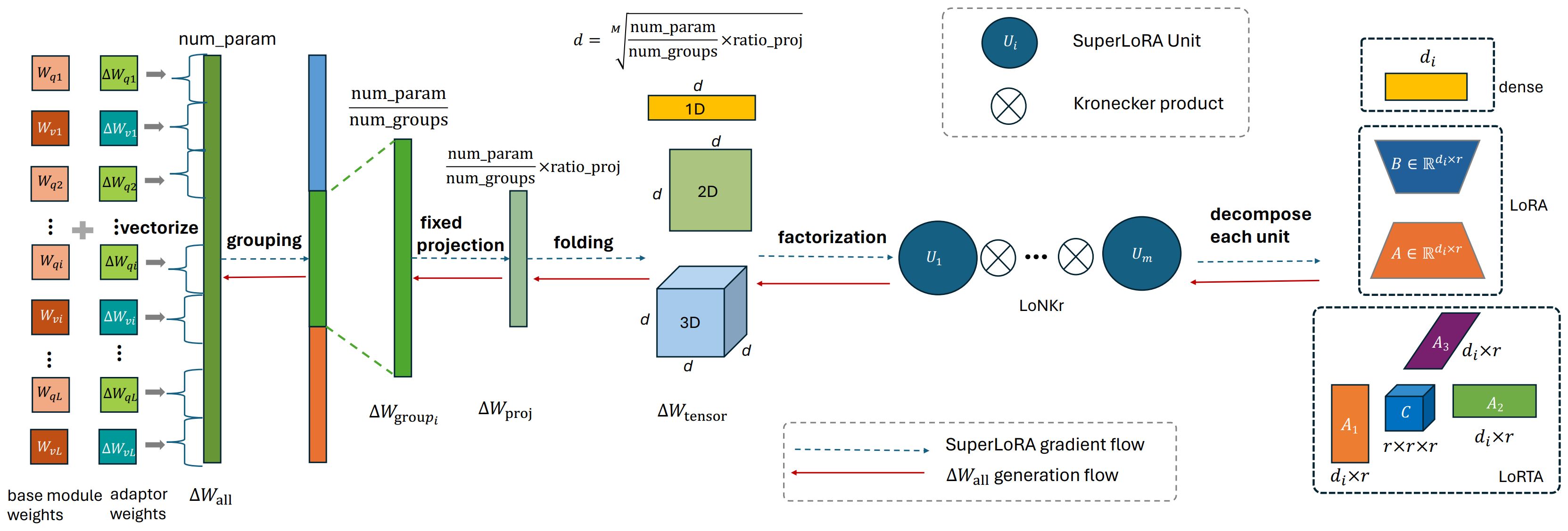

SuperLoRA: Low-Rank Tensor Adaptation

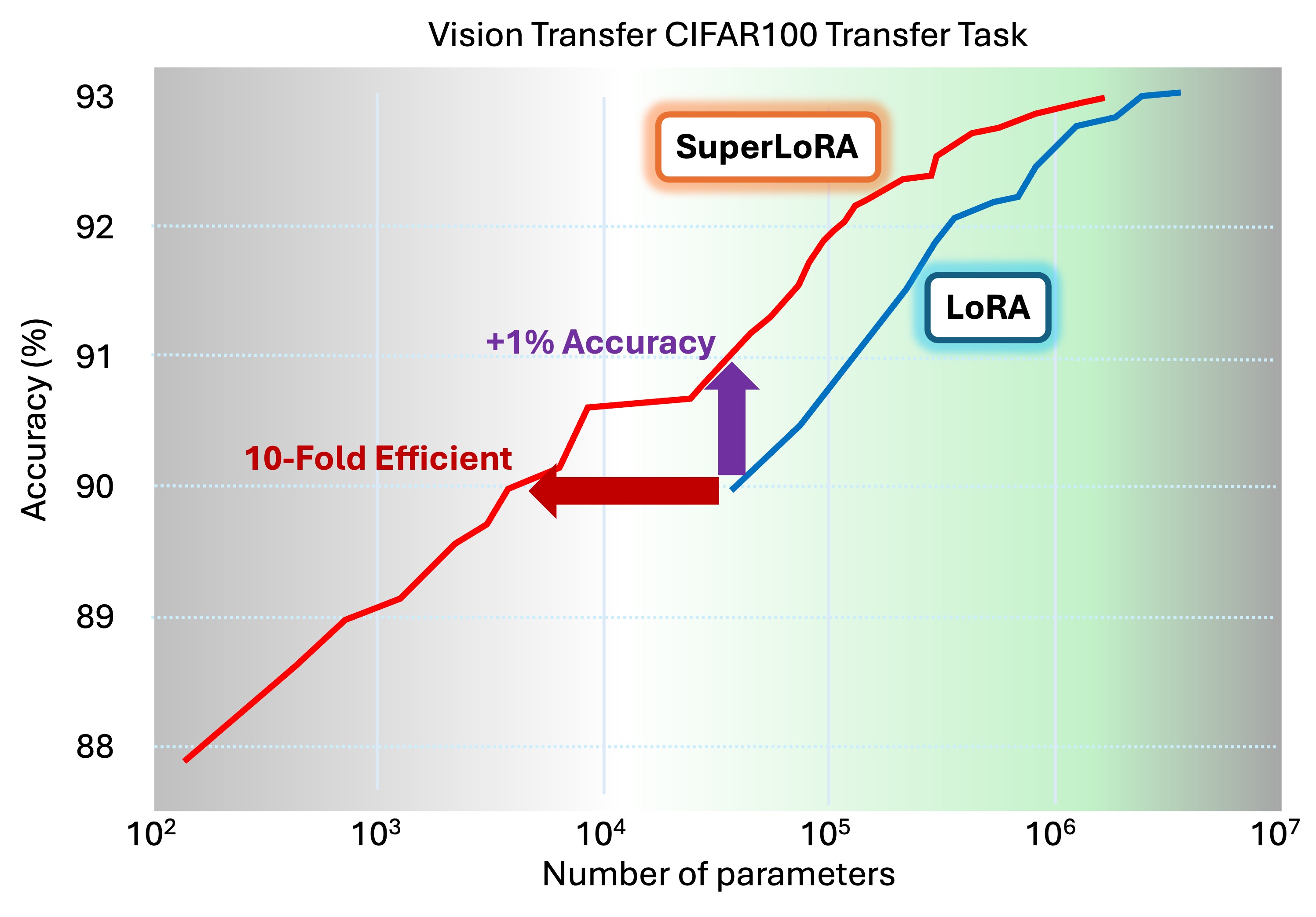

SuperLoRA is a new framework that unifies and extends LoRA variants, offering high flexibility and efficiency in transfer learning tasks. By introducing innovative options like grouping, folding, shuffling, projection, and tensor decomposition, SuperLoRA achieves remarkable parameter efficiency gains of up to 10-fold compared to conventional methods.

Joint Weight Updates: Unlike traditional LoRA approaches that update weights individually, SuperLoRA enables simultaneous updates of multiple weight matrices. This joint adaptation enables more efficient and effective fine-tuning.

Flexible Tensor Decomposition: SuperLoRA leverages tensor-rank decomposition techniques to optimize parameter efficiency. By stacking, reshaping, and folding weight matrices, it creates unprecedented opportunities for more efficient low-rank adaptations.

- Grouping: Flexible chunking for joint weight updates

- Shuffling: Element rearrangement before tensor rank decomposition

- Fastfood Projection: Compression technique enabling super-fast computation

- Nonlinear Projection: Generalizing LoDA within the SuperLoRA framework

- Folding: Forming arbitrary dimensional tensors

- Factorization: Kronecker factoring to generalize LoKr

- Tucker Decomposition: High-order tensor rank decomposition
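One of the options listed above, Kronecker factoring, can be shown in a few lines. The sketch builds a weight update as the Kronecker product of two small factors and compares the number of stored values. The helper names and the toy factors are assumptions for illustration; this shows the factorization idea only, not the SuperLoRA implementation.

```python
def kron(A, B):
    """Kronecker product of two matrices given as lists of rows."""
    return [[a * b for a in row_a for b in row_b] for row_a in A for row_b in B]

def count(M):
    """Number of entries in a matrix."""
    return len(M) * len(M[0])

A = [[1.0, 2.0], [3.0, 4.0]]               # 2 x 2 factor
B = [[0.0, 1.0, 0.0], [1.0, 0.0, 1.0]]     # 2 x 3 factor
delta_W = kron(A, B)                       # 4 x 6 weight update

dense_params = count(delta_W)              # 24 values if the update were stored densely
factored_params = count(A) + count(B)      # 10 trainable values in factored form
```

The gap between the two counts widens quickly as the factor sizes grow, since the dense count is the product of the factor counts while the factored count is their sum.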

Performance Gains: SuperLoRA has demonstrated significant improvements over existing LoRA variants on benchmark datasets. For vision tasks, it achieved approximately 10 times higher parameter efficiency while also improving accuracy by 1% in CIFAR-100 transfer learning.

For details on vision tasks with diffusion models and vision transformers (ViT), please refer to our technical report: TR2024-062 SuperLoRA: Parameter-Efficient Unified Adaptation for Large Vision Models; and preprint on arXiv. For additional analysis of LLM natural language generation tasks, please see another technical report: TR2024-156 SuperLoRA: Parameter-Efficient Unified Adaptation of Large Foundation Models.

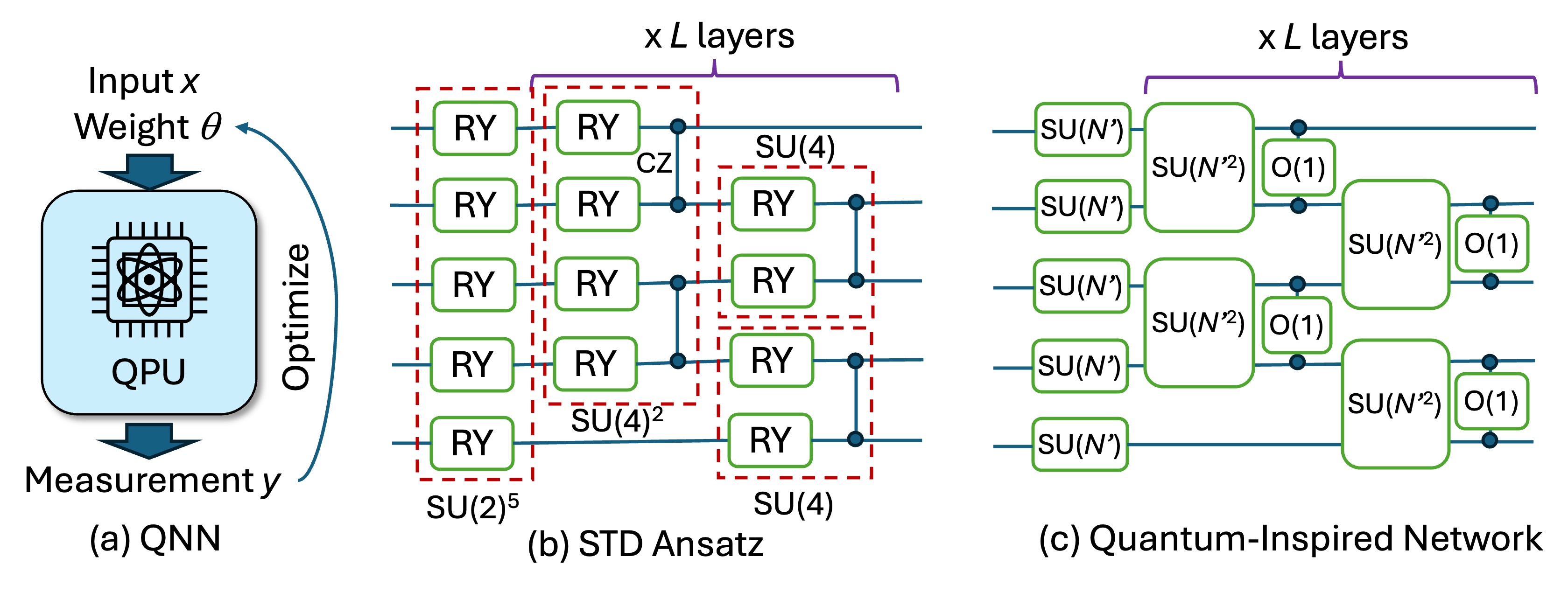

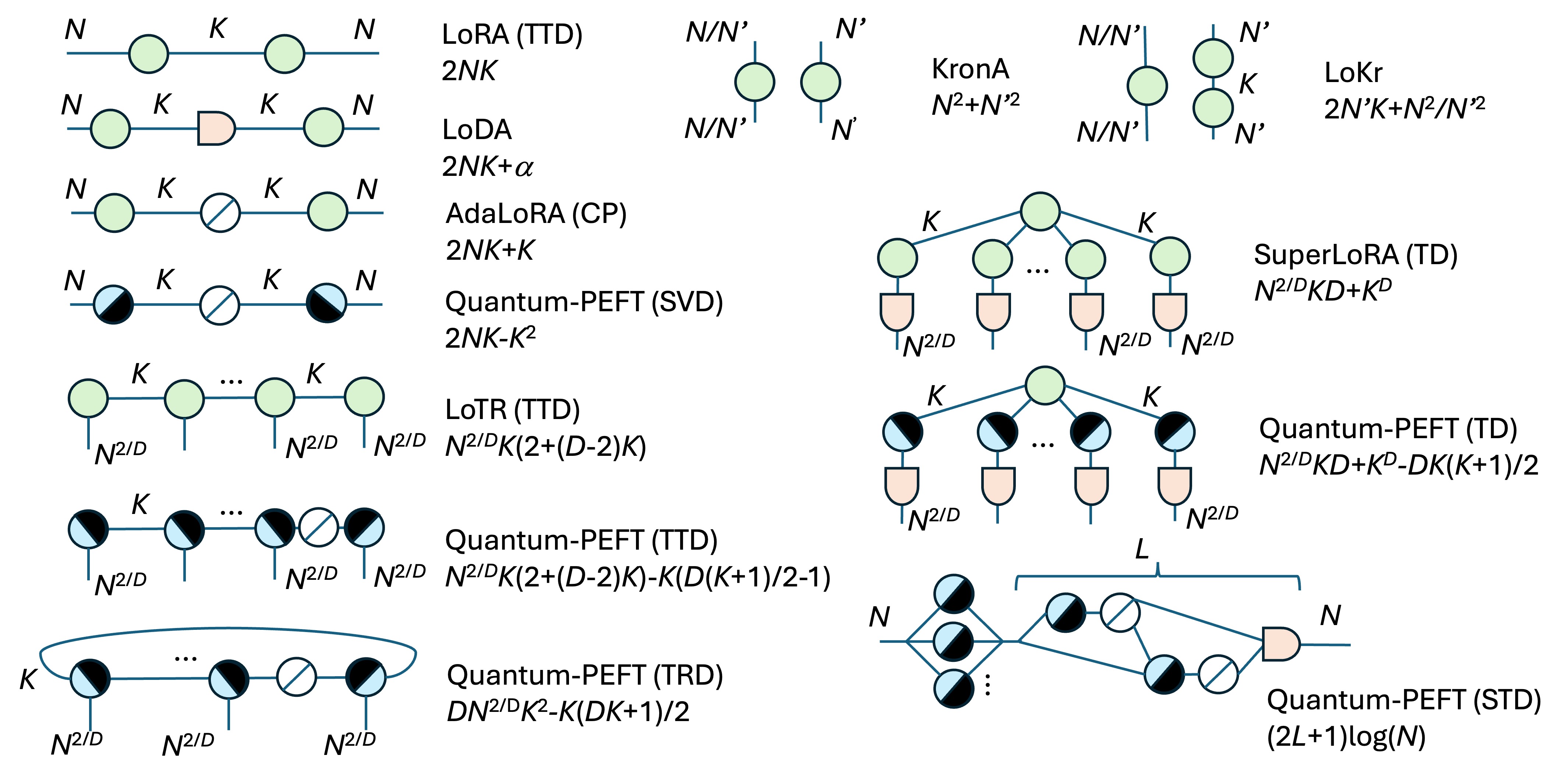

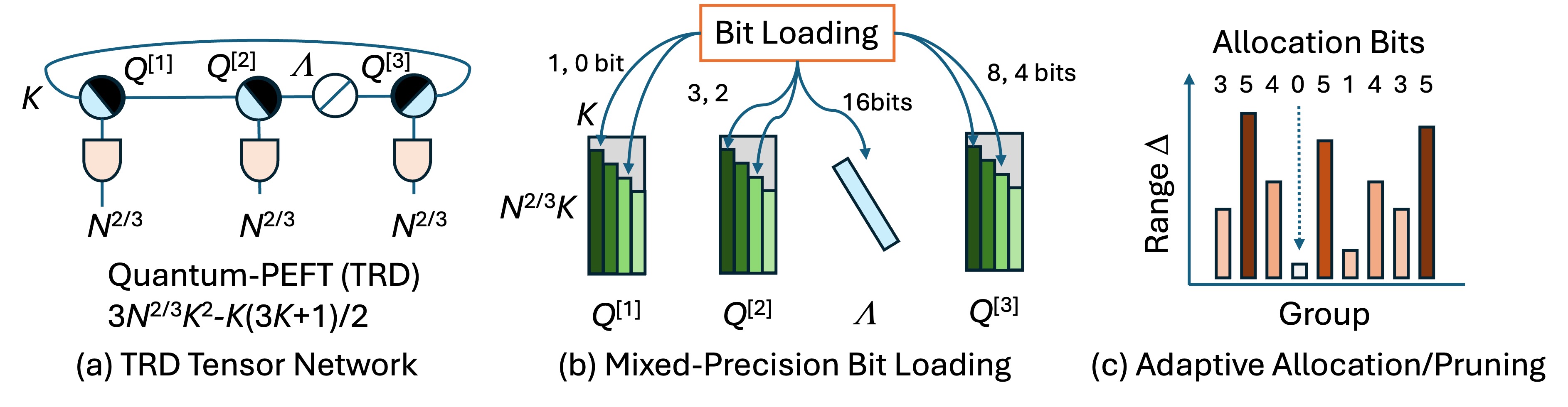

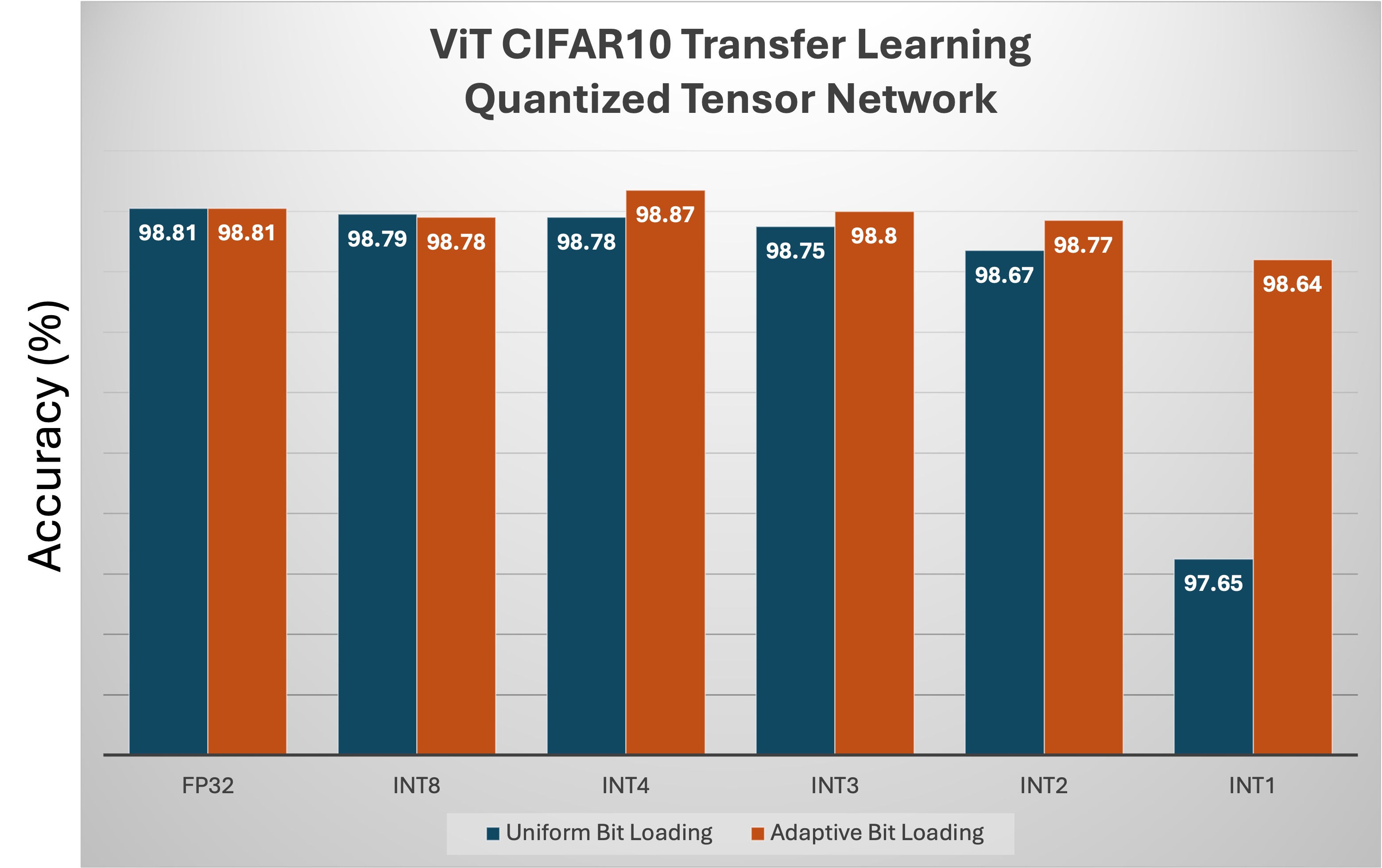

Quantum-PEFT: Quantum-Inspired Tensor-Network Adaptation

Our research introduces Quantum-PEFT, an innovative framework that leverages quantum tensor networks to achieve ultra parameter-efficient fine-tuning. This method surpasses traditional PEFT techniques by utilizing concepts from quantum machine learning (QML).

Quantum-PEFT offers several significant advantages:

- Full-rank yet parameter-efficient quantum unitary parameterization with alternating entanglement

- Pauli parameterization resulting in logarithmic growth of trainable parameters

- Significantly fewer trainable parameters compared to lowest-rank LoRA while maintaining competitive performance

- Quantum-inspired modules generalizing 1-qubit gates, 2-qubit gates, and measurement

- Operability with mixed-precision tensor networks

- Compatibility with both classical and quantum computers
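The idea of a full-rank matrix described by a logarithmic number of parameters can be sketched with a deliberately simplified construction: an N x N orthogonal matrix formed as the Kronecker product of log2(N) one-qubit rotations. This sketch omits the entangling layers mentioned above, so it is far less expressive than Quantum-PEFT itself; the function names and angles are assumptions for illustration.

```python
import math

def kron(A, B):
    """Kronecker product of two matrices given as lists of rows."""
    return [[a * b for a in row_a for b in row_b] for row_a in A for row_b in B]

def rotation(theta):
    """2 x 2 real rotation, playing the role of a one-qubit RY-style gate."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s], [s, c]]

def product_rotation(thetas):
    """Kronecker product of one rotation per angle: a 2**n x 2**n orthogonal matrix."""
    U = [[1.0]]
    for t in thetas:
        U = kron(U, rotation(t))
    return U

thetas = [0.3, -1.1, 0.7]      # 3 trainable angles
U = product_rotation(thetas)   # 8 x 8 matrix, full rank

# Gram matrix U^T U; for an orthogonal matrix it equals the identity.
n = len(U)
gram = [[sum(U[k][i] * U[k][j] for k in range(n)) for j in range(n)] for i in range(n)]
```

Here 3 angles define a matrix with 64 entries, and doubling the matrix size adds only one more angle.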

We found that most LoRA variants can be formulated using tensor networks, which provide a way to represent and manipulate multi-dimensional data arrays by factorizing them into networks of lower-dimensional tensors. For instance, Tucker decomposition used in SuperLoRA is one such tensor network, and low-rank decomposition used in LoRA is a 2D tensor-train decomposition (TTD), also known as matrix product state (MPS). MPS is a widely used quantum tensor network in QML. Quantum-PEFT builds upon recent advancements in QML, which have demonstrated competitive performance and exponentially rich expressivity suitable for PEFT applications.

Quantum-PEFT has demonstrated gains of up to 50-fold in parameter efficiency across various transfer learning benchmarks in both language and vision domains. For more details, please refer to our technical report: TR2024-101 Quantum-PEFT: Ultra Parameter-Efficient Fine-Tuning. It was presented at the ICML 2024 Workshop ES-FoMo-II. This approach opens up new possibilities for more efficient and effective AI through interaction with natural computing paradigms. To learn more about our work on natural computing for green AI, please see our other highlight page on quantum AI technology.

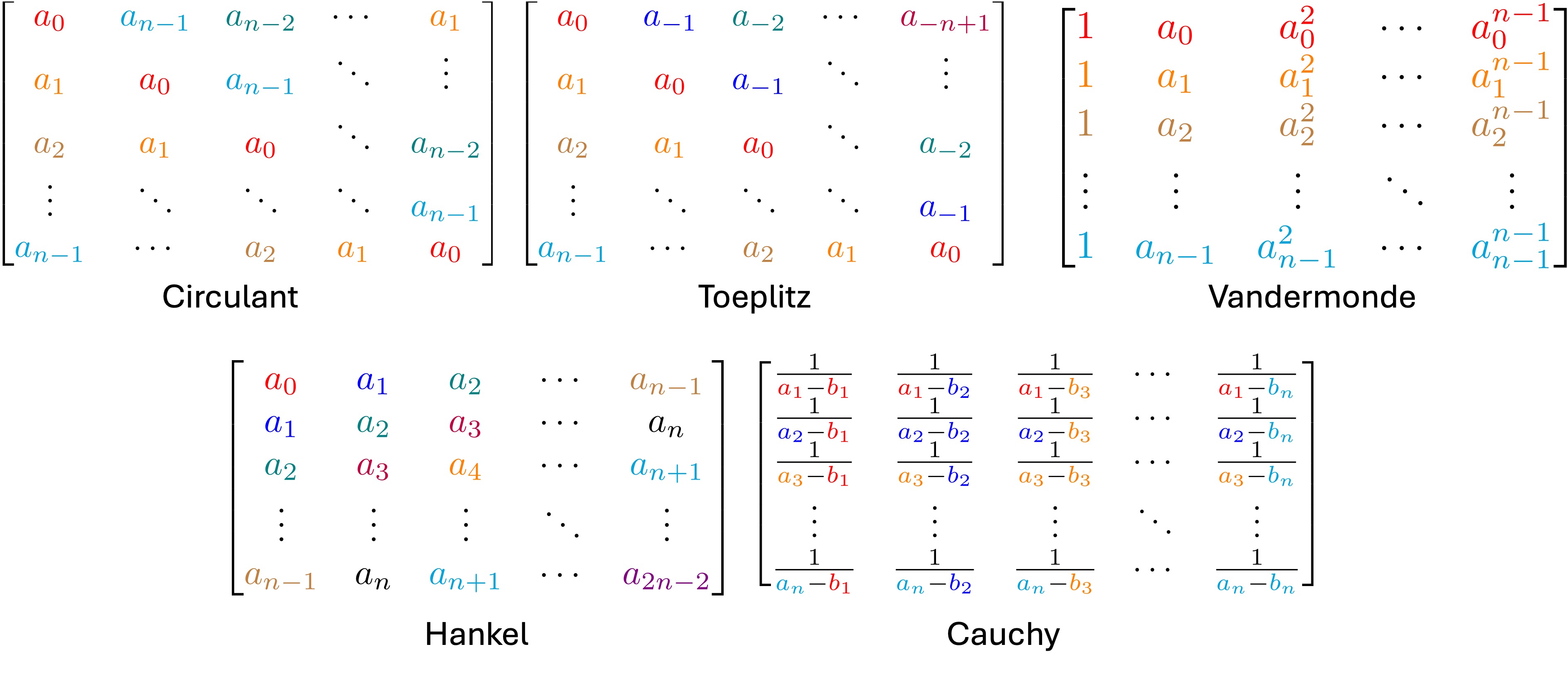

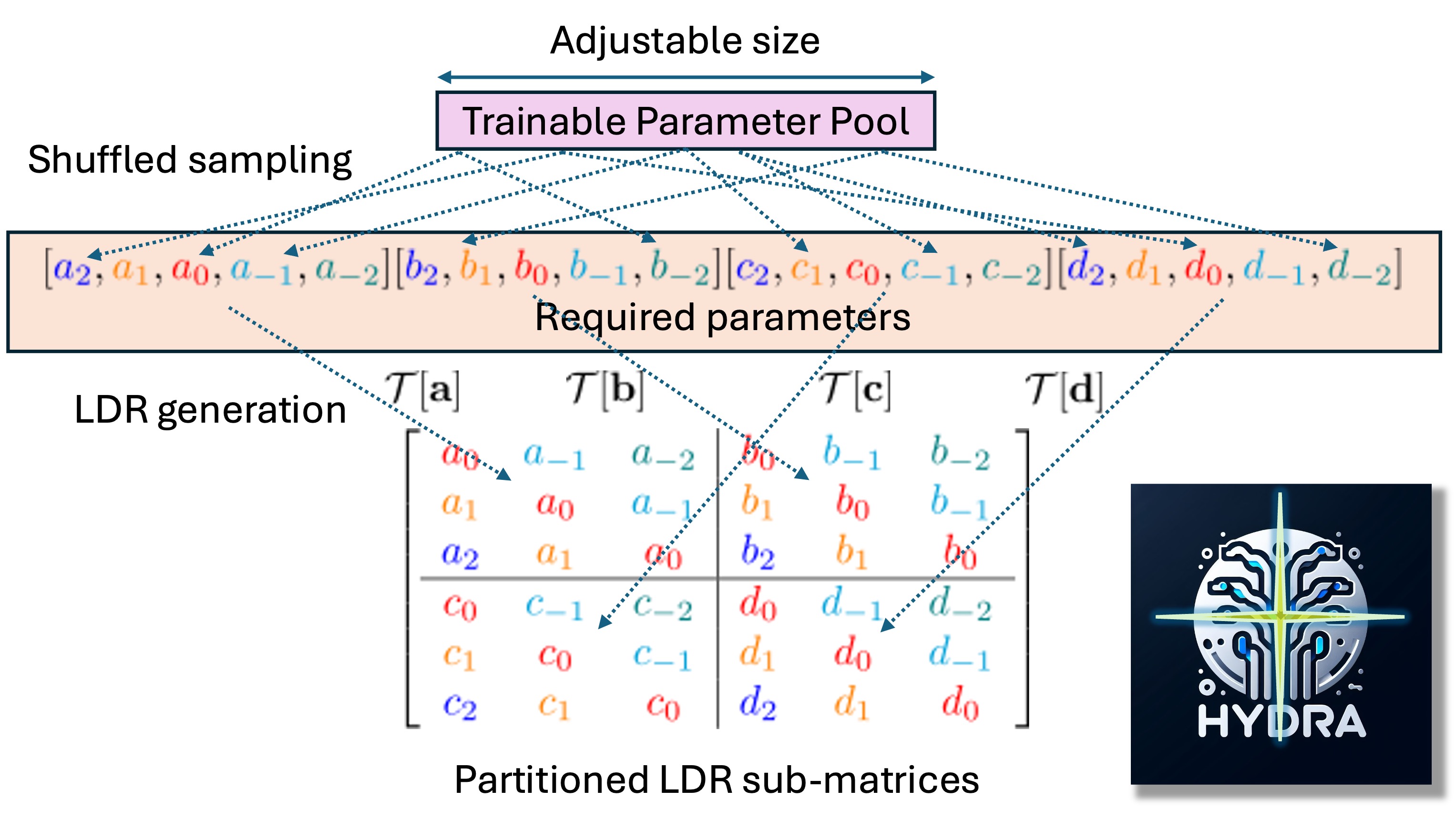

HyDRA: Hypernet Low-Displacement Rank Adaptation

The low-rank structure used in LoRA is a special case of the low-displacement rank (LDR) matrix family, which also includes rank-unrestricted structured matrices such as circulant, Toeplitz, Vandermonde, Hankel, and Cauchy matrices. LDR matrices offer two notable benefits:

- Parameter efficiency: An LDR matrix of size (N, N) can be constructed using only O(N) parameters

- Computational efficiency: LDR matrices enable superfast matrix-vector multiplication at sub-quadratic O(N polylog(N)) cost
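Both benefits can be seen in the circulant case, sketched below. A circulant matrix is defined by N values, and its matrix-vector product is a circular convolution, which an FFT computes in O(N log N). The small recursive FFT and the function names are written here for illustration only and assume N is a power of two.

```python
import cmath

def fft(a, invert=False):
    """Recursive radix-2 FFT (unnormalized); len(a) must be a power of two."""
    n = len(a)
    if n == 1:
        return list(a)
    even = fft(a[0::2], invert)
    odd = fft(a[1::2], invert)
    sign = 1 if invert else -1
    out = [0j] * n
    for k in range(n // 2):
        tw = cmath.exp(sign * 2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + tw
        out[k + n // 2] = even[k] - tw
    return out

def circulant_matvec_dense(c, x):
    """O(N^2) reference using the explicit matrix C[i][j] = c[(i - j) % N]."""
    n = len(c)
    return [sum(c[(i - j) % n] * x[j] for j in range(n)) for i in range(n)]

def circulant_matvec_fft(c, x):
    """O(N log N) product: multiply the spectra, then invert and normalize."""
    n = len(c)
    prod = [a * b for a, b in zip(fft(c), fft(x))]
    return [v.real / n for v in fft(prod, invert=True)]

c = [1.0, 2.0, 3.0, 4.0]      # N parameters define the whole N x N matrix
x = [1.0, 0.0, -1.0, 2.0]
y_dense = circulant_matvec_dense(c, x)
y_fast = circulant_matvec_fft(c, x)
```

The two results agree up to floating-point rounding, while the fast path never forms the N x N matrix.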

We propose a novel PEFT strategy that leverages the power of LDR matrices and hyper networks to enhance model adaptation. Our method, hyper low-displacement rank adaptation (HyDRA), offers high flexibility for choosing the size of a pool of trainable parameters, while not being restricted by the displacement rank. Key features of HyDRA include:

- Structured matrix: HyDRA uses LDR matrices, which offer efficient parameterization and superfast computing capabilities

- Hyper network: Parameters are sampled from a limited trainable parameter pool using a hyper network framework

- Block partitioning: LDR matrices are partitioned into blocks, increasing their complexity and expressiveness

- Flexible parameter pool: The number of trainable parameters can be adjusted independently of displacement rank and matrix size

- Partially fixed parameters: A fraction of the parameter pool can be random but fixed, further improving the efficiency
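The decoupling of the parameter pool from the matrix size can be sketched as follows. A fixed random index map stands in for the hyper network and draws the generators of a Toeplitz matrix from a small trainable pool. The index-map mechanism, the function names, and the toy values are all assumptions for illustration; the actual HyDRA generator may differ.

```python
import random

def toeplitz(first_col, first_row):
    """Toeplitz matrix whose entry (i, j) depends only on i - j; first_row[0] is ignored."""
    n = len(first_col)
    return [[first_col[i - j] if i >= j else first_row[j - i] for j in range(n)]
            for i in range(n)]

def sample_from_pool(pool, indices):
    """Pick pool entries by index; the indices stay fixed while the pool is trained."""
    return [pool[k] for k in indices]

n, pool_size = 4, 3
rng = random.Random(0)
pool = [0.5, -1.0, 2.0]                                            # the only trainable values
index_map = [rng.randrange(pool_size) for _ in range(2 * n - 1)]   # fixed, never trained
generators = sample_from_pool(pool, index_map)                     # 2n - 1 Toeplitz generators
T = toeplitz(generators[:n], [generators[0]] + generators[n:])     # n x n structured matrix
```

In this toy setup 3 trainable values drive a 4 x 4 matrix that would normally need 7 generators, and the pool size can be changed without touching the matrix size.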

Our experiments demonstrate that HyDRA can boost classification accuracy by up to 3.4% and achieve a two-fold improvement in parameter efficiency on an image benchmark compared with other PEFT baselines. For more details, please refer to our technical report: TR2024-157 Slaying the HyDRA: Parameter-Efficient Hyper Networks with Low-Displacement Rank Adaptation.

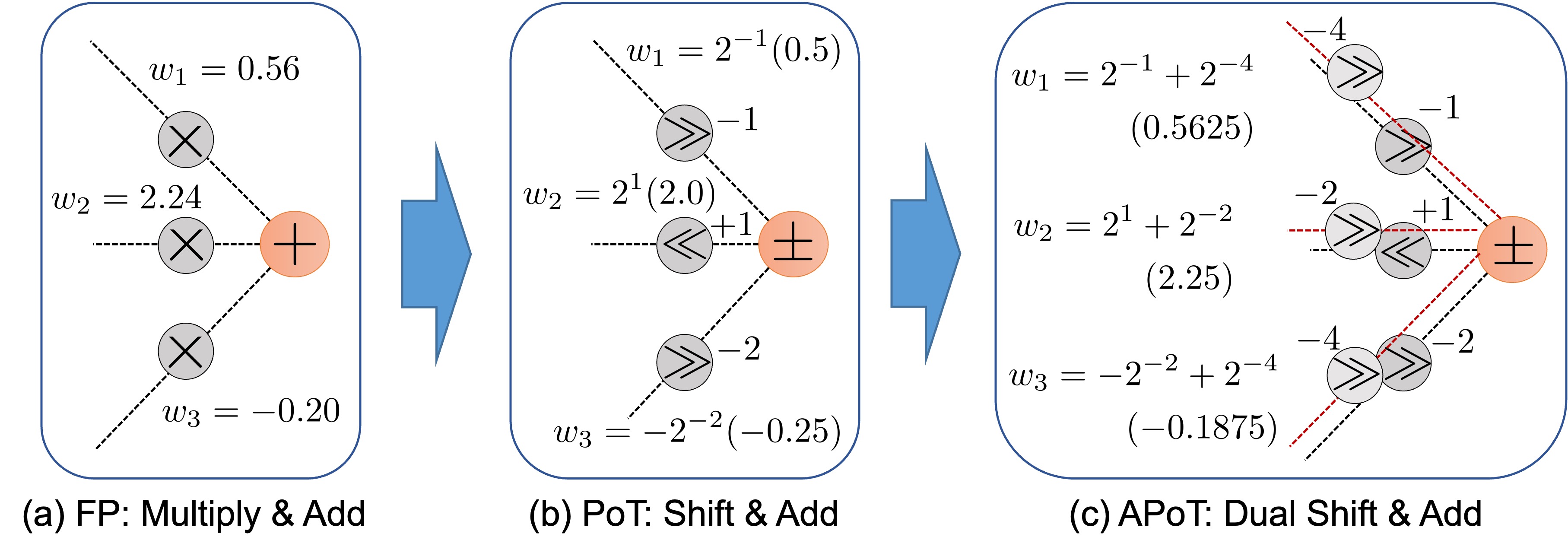

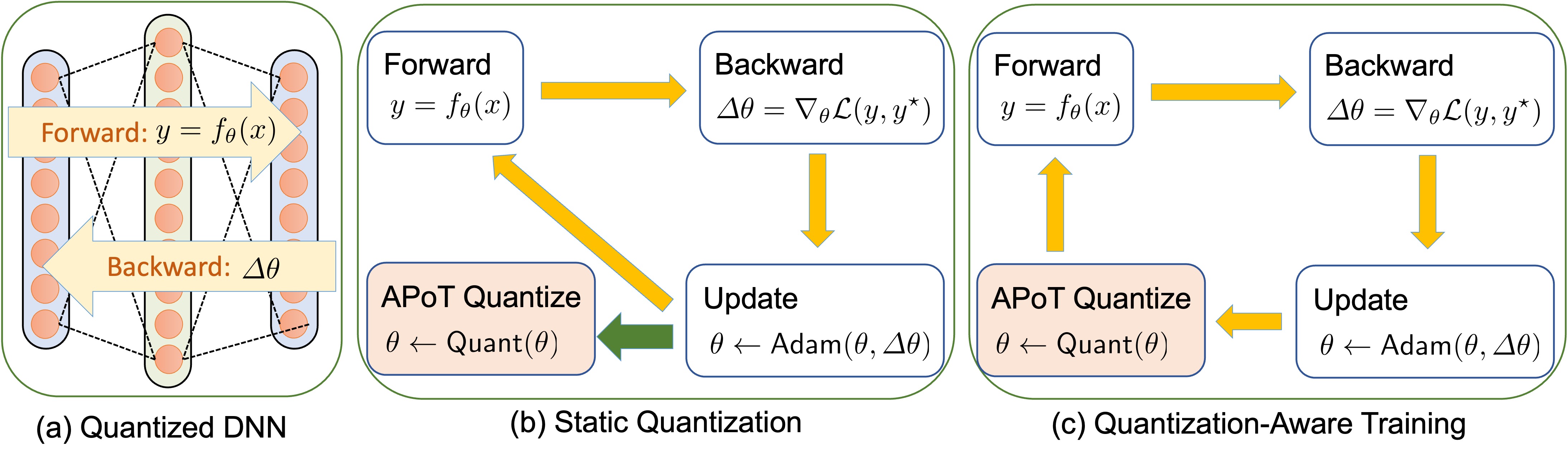

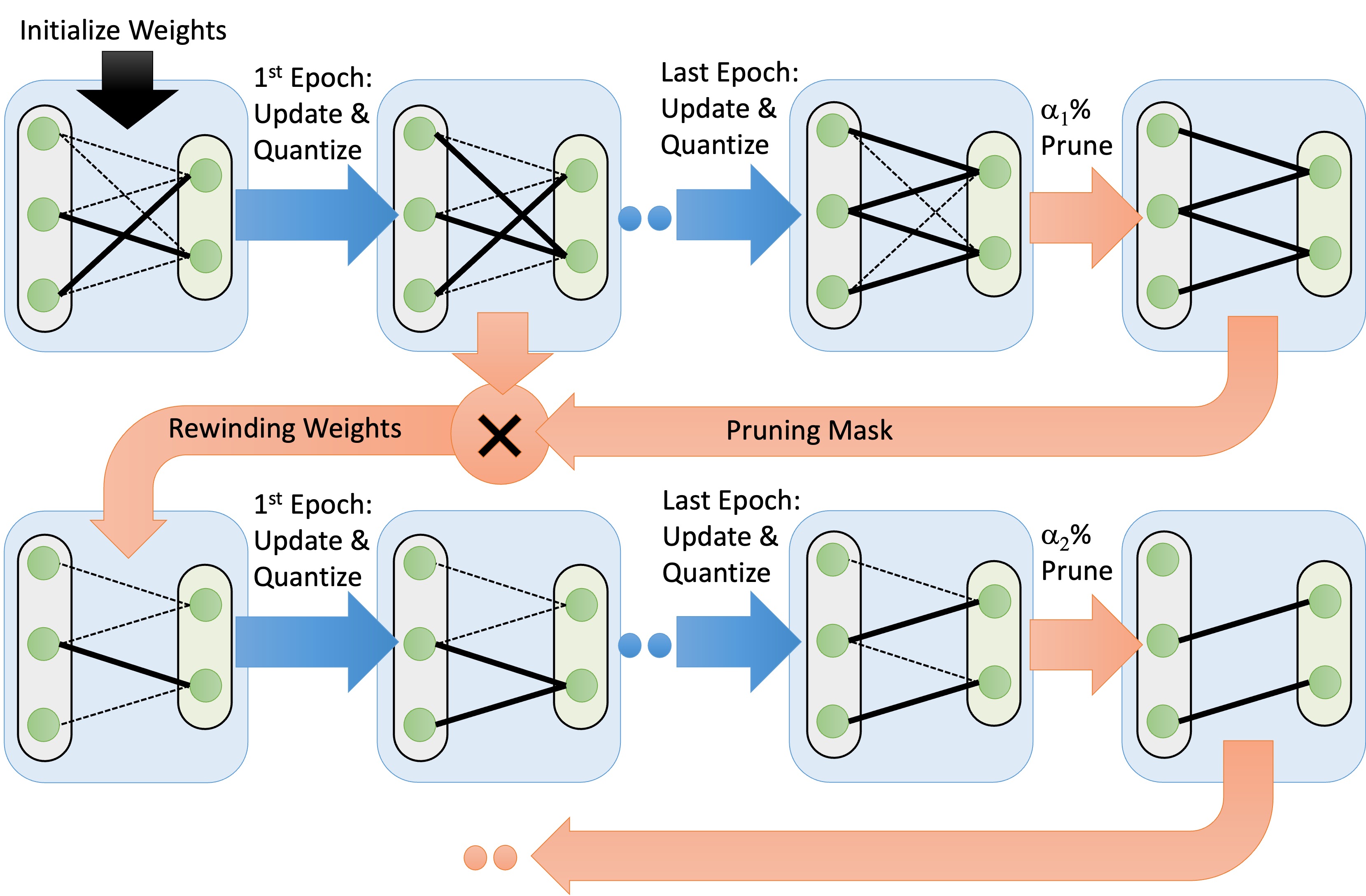

Zero-Multiplier AI: Quantization and Pruning

We developed a framework to create highly efficient AI models that eliminate the need for multiplication operations entirely. Our approach combines three key techniques:

- Additive Powers-of-Two (APoT) Quantization: This method represents each weight as a sum of a few powers of 2, so that multiplications reduce to bit shifts and additions.

- Quantization-Aware Training (QAT): By incorporating quantization effects during the training process, we maintain model accuracy while using low-precision representations.

- Progressive Lottery Ticket Hypothesis (LTH) Pruning: This iterative pruning technique allows us to significantly reduce model size while preserving performance.
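The multiplication-free idea behind powers-of-two weights can be sketched as follows. A weight is approximated greedily by a signed sum of a few powers of two, and the product with an activation is then formed from exponent shifts and additions only. The greedy selection, the exponent range, and the function names are simplifying assumptions; the actual APoT level set is defined differently.

```python
import math

def apot_terms(w, num_terms=2, min_exp=-6, max_exp=0):
    """Greedily approximate |w| by a sum of at most num_terms powers of two."""
    sign = -1 if w < 0 else 1
    residual = abs(w)
    exps = []
    for _ in range(num_terms):
        if residual < 2.0 ** min_exp:
            break                                   # nothing representable is left
        e = min(max_exp, math.floor(math.log2(residual)))
        exps.append(e)
        residual -= 2.0 ** e
    return sign, exps

def shift_add_multiply(x, sign, exps):
    """Compute x * w_q with exponent shifts (ldexp) and additions, without multiplying."""
    acc = 0.0
    for e in exps:
        acc += math.ldexp(x, e)                     # x * 2**e as a shift of the exponent
    return -acc if sign < 0 else acc

sign, exps = apot_terms(0.40)                       # 0.40 is approximated by 2**-2 + 2**-3
product = shift_add_multiply(8.0, sign, exps)       # 8 * 0.375
neg_sign, neg_exps = apot_terms(-0.75)              # -(2**-1 + 2**-2)
neg_product = shift_add_multiply(4.0, neg_sign, neg_exps)
```

In fixed-point hardware the `ldexp` call corresponds to a bit shift, so each weight costs a handful of shifts and adds instead of a multiplier.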

Progressive LTH pruning iteratively removes unnecessary connections, shrinking the model to as little as 1/100 of its original size for a regression task. For details, please check out our technical report: TR2021-110 Zero-Multiplier Sparse DNN Equalization for Fiber-Optic QAM Systems with Probabilistic Amplitude Shaping.
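A minimal sketch of progressive magnitude pruning is given below. Each round removes a fixed fraction of the weights that are still active, and a helper counts how many rounds are needed to reach a target density. The 20% per-round fraction, the function names, and the toy weights are assumptions for illustration; retraining between rounds is indicated only by a comment.

```python
def prune_round(weights, mask, fraction):
    """Deactivate the smallest-magnitude `fraction` of the currently active weights."""
    active = sorted((abs(w), i) for i, (w, m) in enumerate(zip(weights, mask)) if m)
    n_prune = int(len(active) * fraction)
    new_mask = list(mask)
    for _, i in active[:n_prune]:
        new_mask[i] = 0
    return new_mask

def rounds_to_reach(target_density, fraction):
    """Number of pruning rounds until the remaining density is at most target_density."""
    density, rounds = 1.0, 0
    while density > target_density:
        density *= 1.0 - fraction
        rounds += 1
    return rounds

weights = [0.9, -0.1, 0.05, -0.6, 0.3, 0.02, -0.8, 0.4, 0.15, -0.25]
mask = [1] * len(weights)
for _ in range(3):
    # In LTH-style pruning the surviving weights would be rewound and retrained here.
    mask = prune_round(weights, mask, fraction=0.2)

rounds_for_1_percent = rounds_to_reach(0.01, 0.2)
```

Removing a modest fraction per round means a 1/100 density is reached only after about twenty rounds, which is what makes the procedure progressive.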

Furthermore, we also tackle the challenge of deploying efficient AI models on resource-constrained edge devices in another technical report: TR2023-096 Joint Software-Hardware Design for Green AI. We have developed AutoHLS, a comprehensive framework that optimizes both software and hardware aspects of AI implementation, resulting in significant improvements in energy efficiency and area utilization. Key features of the AutoHLS framework include:

- Holistic Optimization: Our approach combines neural architecture search (NAS) with hardware-level FPGA implementation, ensuring efficiency at every level.

- Sparse Quantized AI: We leverage sparse and quantized AI models with APoT and LTH to reduce computational requirements.

- Hardware Implementation: Our framework targets Field-Programmable Gate Array (FPGA) platforms, enabling customized hardware acceleration.

When applied to a real-time AI model, our method achieved:

- 2x Power Reduction: Halving the energy consumption compared to the baseline implementation.

- 40x Area Reduction: Dramatically shrinking the hardware footprint required for the AI model.

These improvements are particularly crucial for Internet of Things (IoT) and edge computing applications, where power and space constraints are significant challenges.

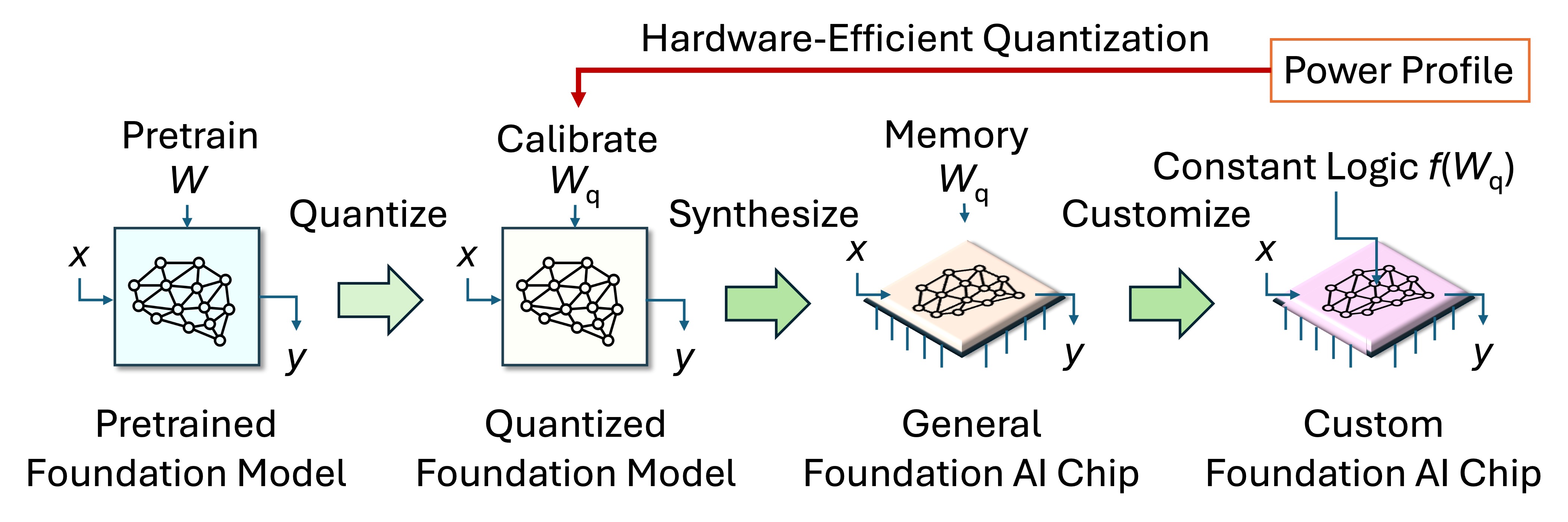

HEQ: Hardware-Efficient Quantization

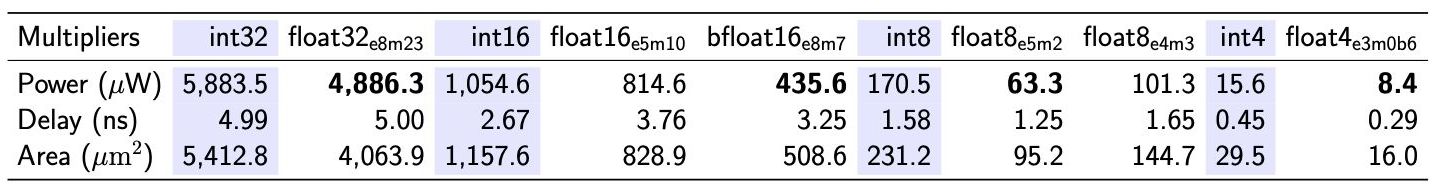

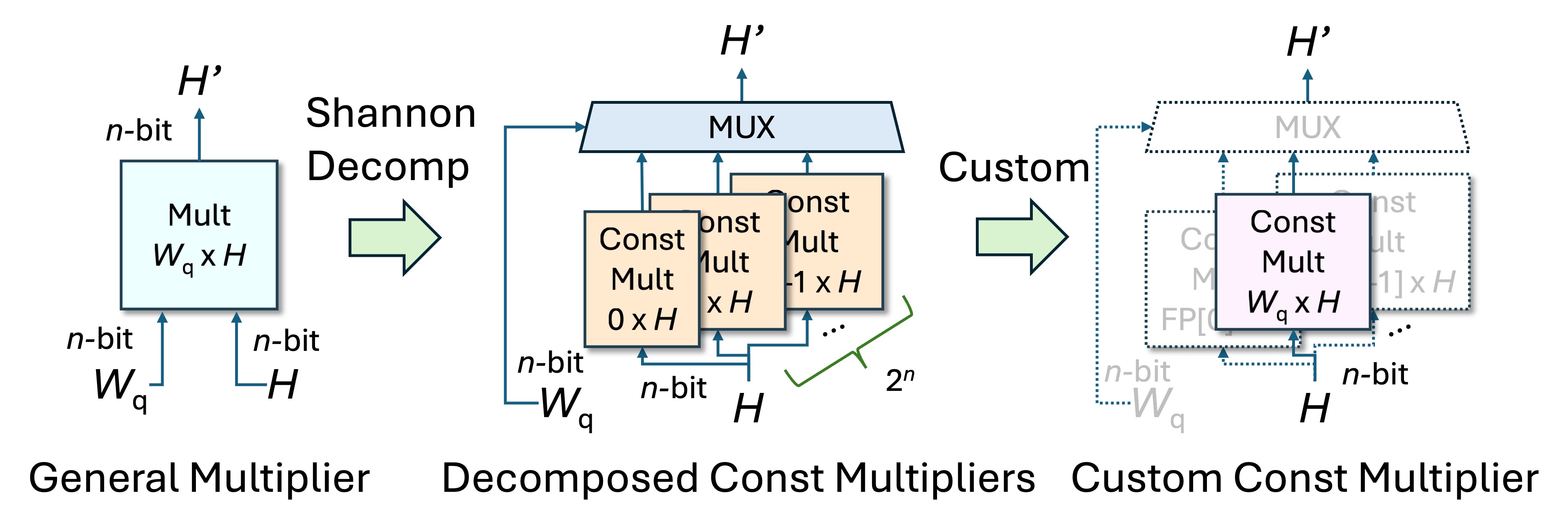

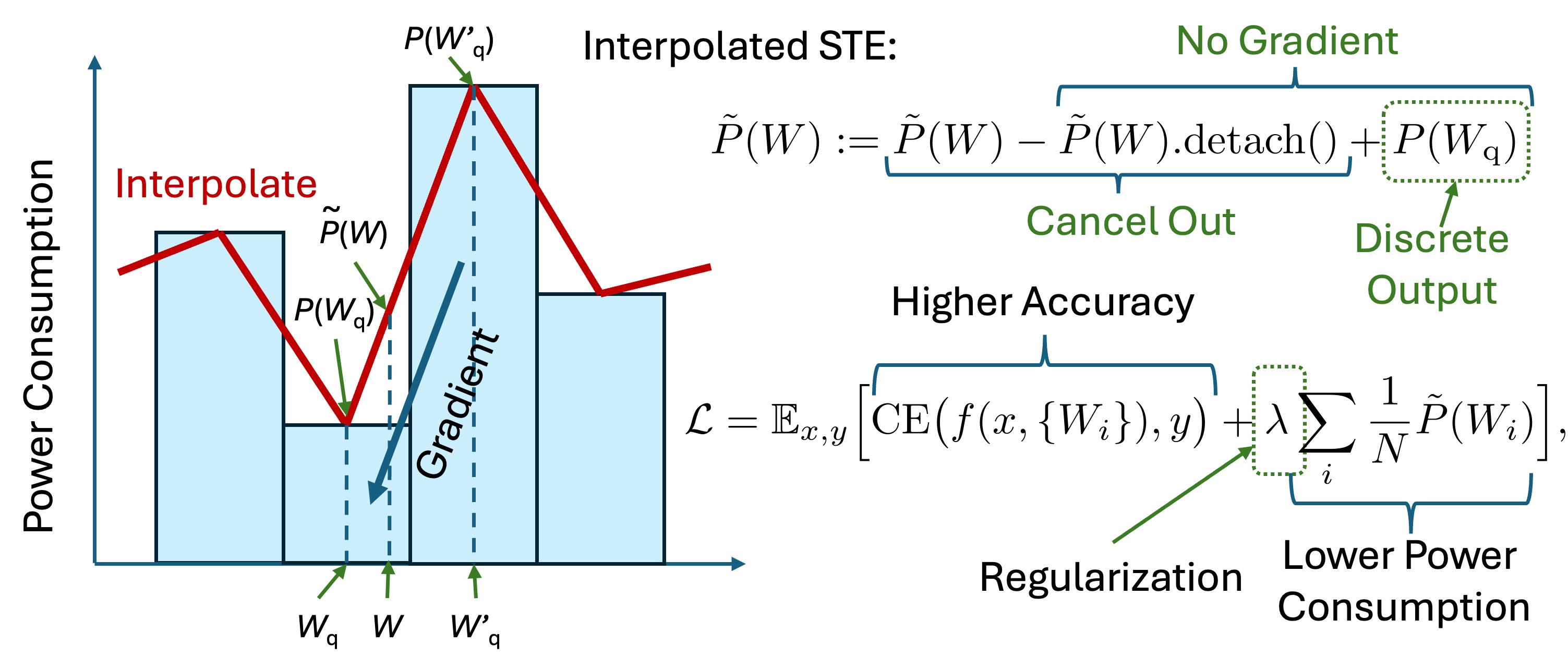

We propose an innovative hardware-efficient quantization (HEQ) method for creating energy-efficient full-custom foundation AI models. HEQ takes a novel approach by jointly optimizing multiplier hardware and weight quantization to minimize overall power consumption.

Our research reveals that the common assumption of integer quantization being more hardware-friendly than floating-point is often incorrect. Using a 45nm CMOS standard cell library, we found bfloat16 multipliers to be 2x more energy efficient than int16 multipliers.

Recognizing that the custom foundation AI models have fixed weights once deployed, FP multipliers can be simplified to custom constant multipliers through Shannon decomposition. Noticing that the energy and area footprints for such custom multipliers depend on weight values, we quantize the AI weights such that the total power consumption and inference performance are optimized.

We introduced a piece-wise linear interpolation with straight-through estimation (STE) to make the discrete hardware profile differentiable. With a regularized loss function, we can optimize total power consumption and inference performance jointly.
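The interpolation step can be sketched in a few lines. A per-level power table is interpolated linearly between neighboring quantization levels, so a real-valued weight level yields both a power estimate and a slope that can act as a gradient, and the power term is added to the task loss with a weighting factor. The table values, the factor `lam`, and the function names are hypothetical and are not taken from the report.

```python
import math

def interp_power(q, table):
    """Piece-wise linear interpolation of a per-level power table at real-valued level q.

    Returns (power, slope); the slope is the gradient of the power term at q.
    """
    q = min(max(q, 0.0), len(table) - 1.0)
    lo = min(int(math.floor(q)), len(table) - 2)
    frac = q - lo
    slope = table[lo + 1] - table[lo]
    return table[lo] + frac * slope, slope

def ste_round(q):
    """Forward pass of a straight-through estimator: round to the nearest level.

    In the backward pass the rounding is treated as the identity.
    """
    return float(round(q))

def regularized_loss(task_loss, levels, table, lam):
    """Task loss plus lam times the interpolated power of all weight levels."""
    power = sum(interp_power(q, table)[0] for q in levels)
    return task_loss + lam * power

power_table = [0.0, 3.0, 1.0, 4.0]      # hypothetical power per quantization level
value, slope = interp_power(1.5, power_table)
loss = regularized_loss(0.5, [0.0, 1.5], power_table, lam=0.1)
```

Because the table need not be monotonic in the level index, the slope can push a weight toward a neighboring level that is cheaper in hardware even when that level is larger in magnitude.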

By leveraging the power profile of custom multipliers, HEQ achieves:

- Up to 4000x power reduction compared to full-precision floating point models

- Up to 0.5% improvement in classification accuracy due to regularization gain

For more details, please refer to our technical report: TR2024-105 Hardware-Efficient Quantization for Green Custom Foundation Models. This work was presented at the ICML 2024 Workshop ES-FoMo-II.

MERL News & Events

NEWS: MERL Papers and Workshops at CVPR 2024

Date: June 17, 2024 - June 21, 2024

Where: Seattle, WA

MERL Contacts: Petros T. Boufounos; Moitreya Chatterjee; Anoop Cherian; Michael J. Jones; Toshiaki Koike-Akino; Jonathan Le Roux; Suhas Lohit; Tim K. Marks; Pedro Miraldo; Jing Liu; Kuan-Chuan Peng; Pu (Perry) Wang; Ye Wang; Matthew Brand

Research Areas: Artificial Intelligence, Computational Sensing, Computer Vision, Machine Learning, Speech & Audio

Brief: MERL researchers are presenting 5 conference papers, 3 workshop papers, and are co-organizing two workshops at the CVPR 2024 conference, which will be held in Seattle, June 17-21. CVPR is one of the most prestigious and competitive international conferences in computer vision. Details of MERL contributions are provided below.

CVPR Conference Papers:

1. "TI2V-Zero: Zero-Shot Image Conditioning for Text-to-Video Diffusion Models" by H. Ni, B. Egger, S. Lohit, A. Cherian, Y. Wang, T. Koike-Akino, S. X. Huang, and T. K. Marks

This work enables a pretrained text-to-video (T2V) diffusion model to be additionally conditioned on an input image (first video frame), yielding a text+image to video (TI2V) model. Other than using the pretrained T2V model, our method requires no ("zero") training or fine-tuning. The paper uses a "repeat-and-slide" method and diffusion resampling to synthesize videos from a given starting image and text describing the video content.

Paper: https://www.merl.com/publications/TR2024-059

Project page: https://merl.com/research/highlights/TI2V-Zero

2. "Long-Tailed Anomaly Detection with Learnable Class Names" by C.-H. Ho, K.-C. Peng, and N. Vasconcelos

This work aims to identify defects across various classes without relying on hard-coded class names. We introduce the concept of long-tailed anomaly detection, addressing challenges like class imbalance and dataset variability. Our proposed method combines reconstruction and semantic modules, learning pseudo-class names and utilizing a variational autoencoder for feature synthesis to improve performance in long-tailed datasets, outperforming existing methods in experiments.

Paper: https://www.merl.com/publications/TR2024-040

3. "Gear-NeRF: Free-Viewpoint Rendering and Tracking with Motion-aware Spatio-Temporal Sampling" by X. Liu, Y-W. Tai, C-T. Tang, P. Miraldo, S. Lohit, and M. Chatterjee

This work presents a new strategy for rendering dynamic scenes from novel viewpoints. Our approach is based on stratifying the scene into regions based on the extent of motion of the region, which is automatically determined. Regions with higher motion are permitted a denser spatio-temporal sampling strategy for more faithful rendering of the scene. Additionally, to the best of our knowledge, ours is the first work to enable tracking of objects in the scene from novel views - based on the preferences of a user, provided by a click.

Paper: https://www.merl.com/publications/TR2024-042

4. "SIRA: Scalable Inter-frame Relation and Association for Radar Perception" by R. Yataka, P. Wang, P. T. Boufounos, and R. Takahashi

Overcoming the limitations on radar feature extraction such as low spatial resolution, multipath reflection, and motion blurs, this paper proposes SIRA (Scalable Inter-frame Relation and Association) for scalable radar perception with two designs: 1) extended temporal relation, generalizing the existing temporal relation layer from two frames to multiple inter-frames with temporally regrouped window attention for scalability; and 2) motion consistency track with a pseudo-tracklet generated from observational data for better object association.

Paper: https://www.merl.com/publications/TR2024-041

5. "RILA: Reflective and Imaginative Language Agent for Zero-Shot Semantic Audio-Visual Navigation" by Z. Yang, J. Liu, P. Chen, A. Cherian, T. K. Marks, J. Le Roux, and C. Gan

We leverage large language models (LLMs) for zero-shot semantic audio-visual navigation. Specifically, by employing multi-modal models to process sensory data, we instruct an LLM-based planner to actively explore the environment by adaptively evaluating and dismissing inaccurate perceptual descriptions.

Paper: https://www.merl.com/publications/TR2024-043

CVPR Workshop Papers:

1. "CoLa-SDF: Controllable Latent StyleSDF for Disentangled 3D Face Generation" by R. Dey, B. Egger, V. Boddeti, Y. Wang, and T. K. Marks

This paper proposes a new method for generating 3D faces and rendering them to images by combining the controllability of nonlinear 3DMMs with the high fidelity of implicit 3D GANs. Inspired by StyleSDF, our model uses a similar architecture but enforces the latent space to match the interpretable and physical parameters of the nonlinear 3D morphable model MOST-GAN.

Paper: https://www.merl.com/publications/TR2024-045

2. "Tracklet-based Explainable Video Anomaly Localization" by A. Singh, M. J. Jones, and E. Learned-Miller

This paper describes a new method for localizing anomalous activity in video of a scene given sample videos of normal activity from the same scene. The method is based on detecting and tracking objects in the scene and estimating high-level attributes of the objects such as their location, size, short-term trajectory and object class. These high-level attributes can then be used to detect unusual activity as well as to provide a human-understandable explanation for what is unusual about the activity.

Paper: https://www.merl.com/publications/TR2024-057

3. "SuperLoRA: Parameter-Efficient Unified Adaptation for Large Vision Models" by X. Chen, J. Liu, Y. Wang, P. Wang, M. Brand, G. Wang, and T. Koike-Akino

This paper proposes a generalized framework called SuperLoRA that unifies and extends different variants of low-rank adaptation (LoRA). By introducing new options for grouping, folding, shuffling, projection, and tensor decomposition, SuperLoRA offers high flexibility and demonstrates up to a 10-fold gain in parameter efficiency for transfer learning tasks.

Paper: https://www.merl.com/publications/TR2024-062

MERL co-organized workshops:

1. "Multimodal Algorithmic Reasoning Workshop" by A. Cherian, K-C. Peng, S. Lohit, M. Chatterjee, H. Zhou, K. Smith, T. K. Marks, J. Mathissen, and J. Tenenbaum

Workshop link: https://marworkshop.github.io/cvpr24/index.html

2. "The 5th Workshop on Fair, Data-Efficient, and Trusted Computer Vision" by K-C. Peng, et al.

Workshop link: https://fadetrcv.github.io/2024/


MERL Publications

- Toshiaki Koike-Akino, Ye Wang, Keisuke Kojima, Kieran Parsons, and Tsuyoshi Yoshida, "Zero-Multiplier Sparse DNN Equalization for Fiber-Optic QAM Systems with Probabilistic Amplitude Shaping", European Conference on Optical Communication (ECOC), DOI: 10.1109/ECOC52684.2021.9605870, September 2021. TR2021-110.

      @inproceedings{Koike-Akino2021sep,
        author = {Koike-Akino, Toshiaki and Wang, Ye and Kojima, Keisuke and Parsons, Kieran and Yoshida, Tsuyoshi},
        title = {{Zero-Multiplier Sparse DNN Equalization for Fiber-Optic QAM Systems with Probabilistic Amplitude Shaping}},
        booktitle = {European Conference on Optical Communication (ECOC)},
        year = 2021,
        month = sep,
        publisher = {IEEE},
        doi = {10.1109/ECOC52684.2021.9605870},
        isbn = {978-1-6654-3868-1},
        url = {https://www.merl.com/publications/TR2021-110}
      }

- Md Rubel Ahmed, Toshiaki Koike-Akino, Kieran Parsons, and Ye Wang, "Joint Software-Hardware Design for Green AI", International Midwest Symposium on Circuits and Systems (MWSCAS), DOI: 10.1109/MWSCAS57524.2023.10405937, August 2023. TR2023-096.

      @inproceedings{Ahmed2023aug,
        author = {Ahmed, Md Rubel and Koike-Akino, Toshiaki and Parsons, Kieran and Wang, Ye},
        title = {{Joint Software-Hardware Design for Green AI}},
        booktitle = {International Midwest Symposium on Circuits and Systems (MWSCAS)},
        year = 2023,
        month = aug,
        publisher = {IEEE},
        doi = {10.1109/MWSCAS57524.2023.10405937},
        issn = {1558-3899},
        isbn = {979-8-3503-0210-3},
        url = {https://www.merl.com/publications/TR2023-096}
      }

- Jing Liu, Toshiaki Koike-Akino, Pu Wang, Matthew Brand, Ye Wang, and Kieran Parsons, "LoDA: Low-Dimensional Adaptation of Large Language Models", Advances in Neural Information Processing Systems (NeurIPS) workshop, December 2023. TR2023-150.

      @inproceedings{Liu2023dec,
        author = {Liu, Jing and Koike-Akino, Toshiaki and Wang, Pu and Brand, Matthew and Wang, Ye and Parsons, Kieran},
        title = {{LoDA: Low-Dimensional Adaptation of Large Language Models}},
        booktitle = {Advances in Neural Information Processing Systems (NeurIPS) workshop},
        year = 2023,
        month = dec,
        url = {https://www.merl.com/publications/TR2023-150}
      }

- Xiangyu Chen, Jing Liu, Ye Wang, Pu Wang, Matthew Brand, Guanghui Wang, and Toshiaki Koike-Akino, "SuperLoRA: Parameter-Efficient Unified Adaptation for Large Vision Models", IEEE Conference on Computer Vision and Pattern Recognition (CVPR), DOI: 10.1109/CVPRW63382.2024.00804, June 2024, pp. 8050-8055. TR2024-062.

      @inproceedings{Chen2024jun,
        author = {Chen, Xiangyu and Liu, Jing and Wang, Ye and Wang, Pu and Brand, Matthew and Wang, Guanghui and Koike-Akino, Toshiaki},
        title = {{SuperLoRA: Parameter-Efficient Unified Adaptation for Large Vision Models}},
        booktitle = {IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
        year = 2024,
        pages = {8050--8055},
        month = jun,
        publisher = {IEEE},
        doi = {10.1109/CVPRW63382.2024.00804},
        url = {https://www.merl.com/publications/TR2024-062}
      }

- Toshiaki Koike-Akino and Volkan Cevher, "Quantum-PEFT: Ultra Parameter-Efficient Fine-Tuning", International Conference on Machine Learning (ICML), July 2024. TR2024-101.

      @inproceedings{Koike-Akino2024jul,
        author = {Koike-Akino, Toshiaki and Cevher, Volkan},
        title = {{Quantum-PEFT: Ultra Parameter-Efficient Fine-Tuning}},
        booktitle = {International Conference on Machine Learning (ICML)},
        year = 2024,
        month = jul,
        url = {https://www.merl.com/publications/TR2024-101}
      }

- Jing Liu, Andrew Lowy, Toshiaki Koike-Akino, Kieran Parsons, and Ye Wang, "Efficient Differentially Private Fine-Tuning of Diffusion Models", International Conference on Machine Learning (ICML) workshop (Next Generation of AI Safety), July 2024. TR2024-104.

      @inproceedings{Liu2024jul,
        author = {Liu, Jing and Lowy, Andrew and Koike-Akino, Toshiaki and Parsons, Kieran and Wang, Ye},
        title = {{Efficient Differentially Private Fine-Tuning of Diffusion Models}},
        booktitle = {International Conference on Machine Learning (ICML) workshop (Next Generation of AI Safety)},
        year = 2024,
        month = jul,
        url = {https://www.merl.com/publications/TR2024-104}
      }

- Toshiaki Koike-Akino, Meng Chang, Volkan Cevher, and Giovanni De Micheli, "Hardware-Efficient Quantization for Green Custom Foundation Models", International Conference on Machine Learning (ICML), July 2024. TR2024-105.

      @inproceedings{Koike-Akino2024jul2,
        author = {Koike-Akino, Toshiaki and Meng Chang and Cevher, Volkan and De Micheli, Giovanni},
        title = {{Hardware-Efficient Quantization for Green Custom Foundation Models}},
        booktitle = {International Conference on Machine Learning (ICML)},
        year = 2024,
        month = jul,
        url = {https://www.merl.com/publications/TR2024-105}
      }

- Xiangyu Chen, Jing Liu, Ye Wang, Pu Wang, Matthew Brand, Guanghui Wang, and Toshiaki Koike-Akino, "SuperLoRA: Parameter-Efficient Unified Adaptation of Large Foundation Models", British Machine Vision Conference (BMVC), November 2024. TR2024-156.

      @inproceedings{Chen2024nov,
        author = {Chen, Xiangyu and Liu, Jing and Wang, Ye and Wang, Pu and Brand, Matthew and Wang, Guanghui and Koike-Akino, Toshiaki},
        title = {{SuperLoRA: Parameter-Efficient Unified Adaptation of Large Foundation Models}},
        booktitle = {British Machine Vision Conference (BMVC)},
        year = 2024,
        month = nov,
        publisher = {British Machine Vision Association},
        url = {https://www.merl.com/publications/TR2024-156}
      }

- Xiangyu Chen, Ye Wang, Matthew Brand, Pu Wang, Jing Liu, and Toshiaki Koike-Akino, "Slaying the HyDRA: Parameter-Efficient Hyper Networks with Low-Displacement Rank Adaptation", Advances in Neural Information Processing Systems (NeurIPS), December 2024. TR2024-157.

      @inproceedings{Chen2024dec,
        author = {Chen, Xiangyu and Wang, Ye and Brand, Matthew and Wang, Pu and Liu, Jing and Koike-Akino, Toshiaki},
        title = {{Slaying the HyDRA: Parameter-Efficient Hyper Networks with Low-Displacement Rank Adaptation}},
        booktitle = {Workshop on Adaptive Foundation Models: Evolving AI for Personalized and Efficient Learning at Neural Information Processing Systems (NeurIPS)},
        year = 2024,
        month = dec,
        url = {https://www.merl.com/publications/TR2024-157}
      }