Computer Vision

Extracting meaning and building representations of visual objects and events in the world.

Our main research themes cover deep learning and artificial intelligence for object and action detection, classification, and scene understanding; robotic vision and object manipulation; 3D processing and computational geometry; and simulation of physical systems to enhance machine learning systems.

Researchers

- Anoop Cherian
- Tim K. Marks
- Michael J. Jones
- Chiori Hori
- Suhas Lohit
- Jonathan Le Roux
- Hassan Mansour
- Matthew Brand
- Siddarth Jain
- Moitreya Chatterjee
- Devesh K. Jha
- Radu Corcodel
- Diego Romeres
- Pedro Miraldo
- Kuan-Chuan Peng
- Ye Wang
- Petros T. Boufounos
- Anthony Vetro
- Daniel N. Nikovski
- Gordon Wichern
- Dehong Liu
- William S. Yerazunis
- Toshiaki Koike-Akino
- Arvind Raghunathan
- Avishai Weiss
- Stefano Di Cairano
- François Germain
- Abraham P. Vinod
- Yanting Ma
- Yoshiki Masuyama
- Philip V. Orlik
- Joshua Rapp
- Huifang Sun
- Pu (Perry) Wang
- Yebin Wang
- Kenji Inomata
- Jing Liu
- Naoko Sawada
- Alexander Schperberg

Awards

AWARD Best Paper Honorable Mention Award at WACV 2021

Date: January 6, 2021

Awarded to: Rushil Anirudh, Suhas Lohit, Pavan Turaga

MERL Contact: Suhas Lohit

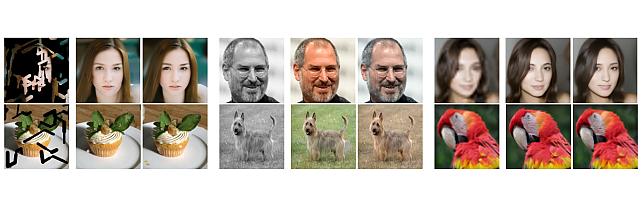

Research Areas: Computational Sensing, Computer Vision, Machine Learning

Brief: A team of researchers from Mitsubishi Electric Research Laboratories (MERL), Lawrence Livermore National Laboratory (LLNL), and Arizona State University (ASU) received the Best Paper Honorable Mention Award at WACV 2021 for their paper "Generative Patch Priors for Practical Compressive Image Recovery".

The paper proposes a novel model of natural images as a composition of small patches, each obtained from a deep generative network. This differs from prior approaches, in which networks attempt to model image-level distributions and are unable to generalize outside their training distributions. The key idea in this paper is that patch-level statistics are far easier to learn. As the authors demonstrate, this model can then be used to efficiently solve challenging inverse problems in imaging, such as compressive image recovery and inpainting, even from very few measurements and for diverse natural scenes.
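As a rough illustration of the general idea (not the authors' method or code), the sketch below recovers a signal whose patches each come from a generator by optimizing one latent code per patch, so that the composed signal agrees with the measurements. Everything here is a simplifying assumption: the "image" is 1-D, the deep generative network is replaced by a hypothetical linear map `W`, and the measurement matrix `A` simply observes a subset of pixels (inpainting). The names `generate_patch`, `compose`, `measure`, and `recover` are illustrative only.

```python
# Toy setting (all hypothetical): an 8-pixel 1-D "image" made of 2 patches of
# 4 pixels, each produced from a 2-D latent code by a linear stand-in generator W.
PATCH, LATENT = 4, 2
W = [[0.5, 0.5], [0.5, -0.5], [0.5, 0.5], [0.5, -0.5]]  # orthonormal columns


def generate_patch(z):
    """Stand-in generator: one latent code -> one patch, i.e. W @ z."""
    return [sum(W[i][k] * z[k] for k in range(LATENT)) for i in range(PATCH)]


def compose(zs):
    """Build the full signal by concatenating the generated patches."""
    return [v for z in zs for v in generate_patch(z)]


def measure(A, x):
    """Linear measurements y = A @ x."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]


def recover(A, y, n_patches, steps=500, lr=0.1):
    """Gradient descent on per-patch latents to minimize ||A x(z) - y||^2."""
    zs = [[0.0] * LATENT for _ in range(n_patches)]
    for _ in range(steps):
        x = compose(zs)
        r = [p - q for p, q in zip(measure(A, x), y)]  # residual A x - y
        # Gradient w.r.t. the signal: dL/dx = 2 A^T r.
        gx = [2 * sum(A[m][j] * r[m] for m in range(len(A))) for j in range(len(x))]
        for p in range(n_patches):
            for k in range(LATENT):
                # Chain rule: pixel p*PATCH + i depends on zs[p][k] through W[i][k].
                g = sum(gx[p * PATCH + i] * W[i][k] for i in range(PATCH))
                zs[p][k] -= lr * g
    return compose(zs)


# Inpainting as a special case of compressive recovery: observe 4 of 8 pixels.
x_true = compose([[2.0, 1.0], [0.0, -2.0]])
observed = [0, 1, 4, 7]
A = [[1.0 if j == i else 0.0 for j in range(8)] for i in observed]
x_hat = recover(A, measure(A, x_true), n_patches=2)
print([round(v, 3) for v in x_hat])  # → [1.5, 0.5, 1.5, 0.5, -1.0, 1.0, -1.0, 1.0]
```

With only 4 measurements of an 8-pixel signal, the per-patch model fills in the unobserved pixels. With a real deep generator the objective is non-convex, so recovery in practice depends on initialization and optimization details that this linear toy does not capture.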


AWARD MERL Researchers win Best Paper Award at ICCV 2019 Workshop on Statistical Deep Learning in Computer Vision

Date: October 27, 2019

Awarded to: Abhinav Kumar, Tim K. Marks, Wenxuan Mou, Chen Feng, Xiaoming Liu

MERL Contact: Tim K. Marks

Research Areas: Artificial Intelligence, Computer Vision, Machine Learning

Brief: MERL researcher Tim Marks, former MERL interns Abhinav Kumar and Wenxuan Mou, and MERL consultants Professor Chen Feng (NYU) and Professor Xiaoming Liu (MSU) received the Best Oral Paper Award at the IEEE/CVF International Conference on Computer Vision (ICCV) 2019 Workshop on Statistical Deep Learning in Computer Vision (SDL-CV), held in Seoul, Korea. Their paper, entitled "UGLLI Face Alignment: Estimating Uncertainty with Gaussian Log-Likelihood Loss," describes a method that, given an image of a face, estimates not only the locations of facial landmarks but also the uncertainty of each landmark location estimate.
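The idea of predicting both a landmark location and its uncertainty can be illustrated with a generic 2-D Gaussian negative log-likelihood. This is a minimal sketch under our own assumptions: it takes an explicit 2×2 covariance rather than a network's output parameterization, and the paper's exact loss (scaling, constants, and how the covariance is predicted) may differ. The function name `gaussian_nll_2d` is hypothetical.

```python
import math


def gaussian_nll_2d(target, mean, cov):
    """Negative log-likelihood of a 2-D landmark `target` under N(mean, cov).

    `cov` is a 2x2 covariance ((sxx, sxy), (syx, syy)) and must be positive definite.
    """
    (a, b), (c, d) = cov
    det = a * d - b * c
    if a <= 0 or det <= 0:
        raise ValueError("covariance must be positive definite")
    dx, dy = target[0] - mean[0], target[1] - mean[1]
    # Mahalanobis term (x - mu)^T Sigma^{-1} (x - mu) via the closed-form 2x2 inverse.
    mahalanobis = (d * dx * dx - (b + c) * dx * dy + a * dy * dy) / det
    # 0.5 * Mahalanobis + 0.5 * log|Sigma| + (k/2) * log(2*pi), with k = 2 dimensions.
    return 0.5 * mahalanobis + 0.5 * math.log(det) + math.log(2 * math.pi)


identity, wide = ((1.0, 0.0), (0.0, 1.0)), ((9.0, 0.0), (0.0, 9.0))

# Large error (3 px): predicting a wider covariance lowers the loss.
big_err = [gaussian_nll_2d((3.0, 0.0), (0.0, 0.0), cov) for cov in (identity, wide)]
# Small error (0.1 px): the tighter covariance is rewarded instead.
small_err = [gaussian_nll_2d((0.1, 0.0), (0.0, 0.0), cov) for cov in (identity, wide)]
print(big_err[1] < big_err[0], small_err[0] < small_err[1])  # → True True
```

The log-determinant term penalizes claiming large uncertainty everywhere, while the Mahalanobis term penalizes overconfidence when the estimate is wrong, so minimizing this kind of loss pushes predicted uncertainty to track actual error.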


AWARD CVPR 2011 Longuet-Higgins Prize

Date: June 25, 2011

Awarded to: Paul A. Viola and Michael J. Jones

Awarded for: "Rapid Object Detection using a Boosted Cascade of Simple Features"

Awarded by: Conference on Computer Vision and Pattern Recognition (CVPR)

MERL Contact: Michael J. Jones

Research Area: Machine Learning

Brief: Recognizes the paper from ten years earlier with the largest impact on the field: "Rapid Object Detection using a Boosted Cascade of Simple Features", originally published at the Conference on Computer Vision and Pattern Recognition (CVPR 2001).
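Two ingredients behind the speed of this detector are the integral image, which makes any rectangle sum a constant-time lookup, and the attentional cascade, which rejects easy background windows early. The sketch below illustrates both; it is not the original implementation, and the single feature, thresholds, polarity, and weights are hypothetical values chosen by hand rather than learned by boosting.

```python
def integral_image(img):
    """Return ii where ii[y][x] is the sum of img over rows < y and cols < x."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]  # extra zero row/column
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii, x, y, w, h):
    """Sum of the w-by-h rectangle whose top-left pixel is (x, y): four lookups."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def two_rect_feature(ii, x, y, w, h):
    """Horizontal two-rectangle Haar-like feature: left half minus right half."""
    half = w // 2
    return rect_sum(ii, x, y, half, h) - rect_sum(ii, x + half, y, half, h)


def cascade_accepts(window_ii, stages):
    """Run a window through the cascade; reject at the first failing stage.

    Each stage is (weak_learners, stage_threshold); each weak learner is
    (feature_fn, theta, polarity, alpha) and votes alpha when
    polarity * feature_fn(window_ii) < polarity * theta.
    """
    for weak_learners, stage_threshold in stages:
        score = sum(alpha for feature_fn, theta, polarity, alpha in weak_learners
                    if polarity * feature_fn(window_ii) < polarity * theta)
        if score < stage_threshold:
            return False  # early exit: most background windows stop here
    return True


# Hypothetical one-stage cascade: fires on "bright left, dark right" edges.
stages = [([(lambda ii: two_rect_feature(ii, 0, 0, 4, 4), 32, -1, 1.0)], 0.5)]
edge = integral_image([[9, 9, 1, 1] for _ in range(4)])
flat = integral_image([[5, 5, 5, 5] for _ in range(4)])
print(rect_sum(edge, 0, 0, 4, 4), two_rect_feature(edge, 0, 0, 4, 4))  # → 80 64
print(cascade_accepts(edge, stages), cascade_accepts(flat, stages))    # → True False
```

Because each feature costs only a handful of lookups and most windows fail an early, cheap stage, the average work per window stays small even when later stages contain many features.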

See All Awards for MERL

News & Events

NEWS Suhas Lohit presents invited talk at Boston Symmetry Day 2025

Date: March 31, 2025

Where: Northeastern University, Boston, MA

MERL Contact: Suhas Lohit

Research Areas: Artificial Intelligence, Computer Vision, Machine Learning

Brief: MERL researcher Suhas Lohit was an invited speaker at Boston Symmetry Day, held at Northeastern University. Boston Symmetry Day, an annual workshop organized by researchers at MIT and Northeastern, brought together attendees interested in symmetry-informed machine learning and its applications. Suhas' talk, titled “Efficiency for Equivariance, and Efficiency through Equivariance,” discussed recent MERL work showing how to build general and efficient equivariant neural networks, and how equivariance can be used in self-supervised learning to improve 3D object detection. The abstract and slides can be found in the link below.
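To make "equivariance" concrete: a map f is equivariant to a transformation g when f(g·x) = g·f(x). The sketch below checks this numerically for 90-degree rotations and a plain 2-D convolution. It is a generic illustration under our own assumptions (square inputs, zero padding, 3×3 kernels), not one of the MERL architectures discussed in the talk: rotating the kernel together with the input makes convolution commute with rotation, and a kernel that is itself unchanged by 90-degree rotation therefore gives a rotation-equivariant layer. Tying weights across rotated copies of a filter in this way is closely related to group convolutions.

```python
def rot90(img):
    """Rotate a square 2-D list by 90 degrees counter-clockwise."""
    n = len(img)
    return [[img[j][n - 1 - i] for j in range(n)] for i in range(n)]


def conv2d_same(img, k):
    """'Same'-size 2-D cross-correlation with a 3x3 kernel and zero padding."""
    n = len(img)
    out = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            s = 0.0
            for di in (-1, 0, 1):
                for dj in (-1, 0, 1):
                    r, c = i + di, j + dj
                    if 0 <= r < n and 0 <= c < n:  # zero padding outside the image
                        s += k[di + 1][dj + 1] * img[r][c]
            out[i][j] = s
    return out


x = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
k = [[0, 1, 0], [2, 3, 4], [0, 5, 0]]    # arbitrary, not rotation-symmetric
ks = [[1, 2, 1], [2, 4, 2], [1, 2, 1]]   # unchanged by 90-degree rotation

# Rotating the kernel together with the input commutes with convolution ...
print(conv2d_same(rot90(x), rot90(k)) == rot90(conv2d_same(x, k)))  # → True
# ... so a rotation-symmetric kernel yields a rotation-equivariant layer.
print(conv2d_same(rot90(x), ks) == rot90(conv2d_same(x, ks)))       # → True
```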


TALK [MERL Seminar Series 2025] Petar Veličković presents talk titled "Amplifying Human Performance in Combinatorial Competitive Programming"

Date & Time: Wednesday, February 26, 2025; 11:00 AM

Speaker: Petar Veličković, Google DeepMind

MERL Host: Anoop Cherian

Research Areas: Artificial Intelligence, Computer Vision, Machine Learning

Abstract:

Recent years have seen a significant surge in complex AI systems for competitive programming, capable of performing at admirable levels against human competitors. While steady progress has been made, the highest percentiles still remain out of reach for these methods on standard competition platforms such as Codeforces. In this talk, I will describe and dive into our recent work, where we focussed on combinatorial competitive programming. In combinatorial challenges, the target is to find as-good-as-possible solutions to otherwise computationally intractable problems, over specific given inputs. We hypothesise that this scenario offers a unique testbed for human-AI synergy, as human programmers can write a backbone of a heuristic solution, after which AI can be used to optimise the scoring function used by the heuristic. We deploy our approach on previous iterations of Hash Code, a global team programming competition inspired by NP-hard software engineering problems at Google, and we leverage FunSearch to evolve our scoring functions. Our evolved solutions significantly improve the attained scores from their baseline, successfully breaking into the top percentile on all previous Hash Code online qualification rounds, and outperforming the top human teams on several. To the best of our knowledge, this is the first known AI-assisted top-tier result in competitive programming.
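The division of labor described in the abstract, a fixed heuristic backbone written by a person plus a scoring function optimized automatically, can be sketched as below. This is a toy under explicit assumptions: the problem is a small 0/1 knapsack rather than a Hash Code task, and FunSearch's LLM-driven evolution of scoring *programs* is replaced by plain random search over a hypothetical one-parameter family `value / weight**alpha`. Only the backbone-plus-scoring-function structure mirrors the talk; `greedy_pack`, `make_score`, and `search_scores` are illustrative names.

```python
import random


def greedy_pack(items, capacity, score):
    """Human-written backbone: take items in decreasing score order while they fit.

    items are (value, weight) pairs with positive weights; returns the packed value.
    """
    total_value, used = 0, 0
    for value, weight in sorted(items, key=lambda it: score(*it), reverse=True):
        if used + weight <= capacity:
            used += weight
            total_value += value
    return total_value


def make_score(alpha):
    """Hypothetical scoring family: value / weight**alpha."""
    return lambda value, weight: value / weight ** alpha


def search_scores(items, capacity, start_alpha=1.0, trials=200, seed=0):
    """Stand-in for the automated step: random search over alpha, keep the best."""
    rng = random.Random(seed)
    best_alpha = start_alpha
    best_value = greedy_pack(items, capacity, make_score(start_alpha))
    for _ in range(trials):
        alpha = rng.uniform(0.0, 2.0)
        value = greedy_pack(items, capacity, make_score(alpha))
        if value > best_value:
            best_alpha, best_value = alpha, value
    return best_alpha, best_value


items = [(60, 10), (100, 20), (120, 30)]
baseline = greedy_pack(items, 50, make_score(1.0))  # plain value-density greedy
best_alpha, best_value = search_scores(items, 50)
print(baseline, best_value)  # → 160 220
```

Keeping the backbone fixed means every candidate scoring function still yields a valid solution, so the search only has to improve how items are ranked, not how feasibility is enforced.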

See All News & Events for Computer Vision

Research Highlights

PS-NeuS: A Probability-guided Sampler for Neural Implicit Surface Rendering -

TI2V-Zero: Zero-Shot Image Conditioning for Text-to-Video Diffusion Models -

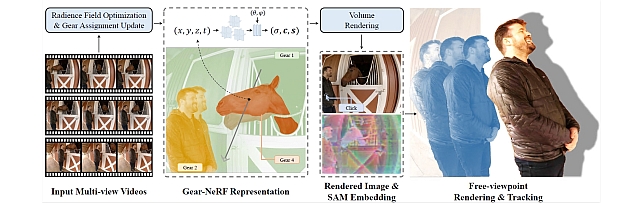

Gear-NeRF: Free-Viewpoint Rendering and Tracking with Motion-Aware Spatio-Temporal Sampling -

Steered Diffusion -

Robust Machine Learning -

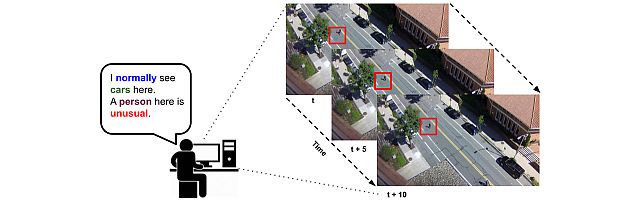

Video Anomaly Detection -

MERL Shopping Dataset -

Point-Plane SLAM

Internships

CV0060: Internship - Video Anomaly Detection

MERL is looking for a self-motivated intern to work on the problem of video anomaly detection. The intern will help to develop new ideas for improving the state of the art in detecting anomalous activity in videos. The ideal candidate would be a Ph.D. student with a strong background in machine learning and computer vision and some experience with video anomaly detection in particular. Proficiency in Python programming and PyTorch is necessary. The successful candidate is expected to have published at least one paper in a top-tier computer vision or machine learning venue, such as CVPR, ECCV, ICCV, WACV, ICML, ICLR, NeurIPS, or AAAI. The intern will collaborate with MERL researchers to develop and test algorithms and prepare manuscripts for scientific publications. The internship is for 3 months and the start date is flexible.

Required Specific Experience

- Graduate student in a Ph.D. program.

- Experience with PyTorch.

- Prior publication in computer vision or machine learning conference/journal.


CV0063: Internship - Visual Simultaneous Localization and Mapping

MERL is looking for a self-motivated graduate student to work on Visual Simultaneous Localization and Mapping (V-SLAM). Based on the candidate’s interests, the intern can work on a variety of topics such as (but not limited to): camera pose estimation, feature detection and matching, visual-LiDAR data fusion, pose-graph optimization, loop closure detection, and image-based camera relocalization. The ideal candidate would be a PhD student with a strong background in 3D computer vision and good programming skills in C/C++ and/or Python. The candidate must have published at least one paper in a top-tier computer vision, machine learning, or robotics venue, such as CVPR, ECCV, ICCV, NeurIPS, ICRA, or IROS. The intern will collaborate with MERL researchers to derive and implement new algorithms for V-SLAM, conduct experiments, and report findings. A submission to a top-tier conference is expected. The duration of the internship and start date are flexible.

Required Specific Experience

- Experience with 3D Computer Vision and Simultaneous Localization & Mapping.


CA0129: Internship - LLM-guided Active SLAM for Mobile Robots

MERL is seeking interns passionate about robotics to contribute to the development of an Active Simultaneous Localization and Mapping (Active SLAM) framework guided by Large Language Models (LLMs). The core objective is to achieve autonomous behavior in mobile robots. The methods will be implemented and evaluated in high-performance simulators and, time permitting, on actual robotic platforms such as legged and wheeled robots. The internship is expected to result in a publication at a top-tier robotics or computer vision conference and/or journal.

The internship has a flexible start date (Spring/Summer 2025), with a duration of 3-6 months depending on agreed scope and intermediate progress.

Required Specific Experience

- Current/Past Enrollment in a PhD Program in Computer Engineering, Computer Science, Electrical Engineering, Mechanical Engineering, or related field

- Experience with employing and fine-tuning LLMs and/or Visual Language Models (VLMs) for high-level, context-aware planning and navigation

- 2+ years of experience with 3D computer vision (e.g., point clouds, voxels, camera pose estimation) and mapping, filter-based methods (e.g., EKF), and at least some of: motion planning algorithms, factor graphs, control, and optimization

- Excellent programming skills in Python and/or C/C++, with prior knowledge of ROS2 and high-fidelity simulators such as Gazebo, Isaac Lab, and/or MuJoCo

Additional Desired Experience

- Prior experience with implementing and/or developing SLAM algorithms on robotic hardware, including acquisition, processing, and fusion of multimodal sensor data, such as data from proprioceptive and exteroceptive sensors

See All Internships for Computer Vision

Openings

See All Openings at MERL

Recent Publications

- Hao Tang, Kevin Ellis, Suhas Lohit, Michael J. Jones, Moitreya Chatterjee, "Programmatic Video Prediction Using Large Language Models", International Conference on Learning Representations Workshops (ICLRW), April 2025. (TR2025-049)

- @inproceedings{Tang2025apr,

- author = {Tang, Hao and Ellis, Kevin and Lohit, Suhas and Jones, Michael J. and Chatterjee, Moitreya},

- title = {{Programmatic Video Prediction Using Large Language Models}},

- booktitle = {International Conference on Learning Representations Workshops (ICLRW)},

- year = 2025,

- month = apr,

- url = {https://www.merl.com/publications/TR2025-049}

- }

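- Chiori Hori, Motonari Kambara, Komei Sugiura, Kei Ota, Sameer Khurana, Siddarth Jain, Radu Corcodel, Devesh K. Jha, Diego Romeres, Jonathan Le Roux, "Interactive Robot Action Replanning using Multimodal LLM Trained from Human Demonstration Videos", IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP), April 2025. (TR2025-034)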

- @inproceedings{Hori2025mar,

- author = {Hori, Chiori and Kambara, Motonari and Sugiura, Komei and Ota, Kei and Khurana, Sameer and Jain, Siddarth and Corcodel, Radu and Jha, Devesh K. and Romeres, Diego and {Le Roux}, Jonathan},

- title = {{Interactive Robot Action Replanning using Multimodal LLM Trained from Human Demonstration Videos}},

- booktitle = {IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP)},

- year = 2025,

- month = mar,

- url = {https://www.merl.com/publications/TR2025-034}

- }

- Siddhant Ranade, Goncalo Pais, Ross Whitaker, Jacinto Nascimento, Pedro Miraldo, Srikumar Ramalingam, "SurfR: Surface Reconstruction with Multi-scale Attention", International Conference on 3D Vision (3DV), March 2025. (TR2025-039)

- @inproceedings{Ranade2025mar,

- author = {Ranade, Siddhant and Pais, Goncalo and Whitaker, Ross and Nascimento, Jacinto and Miraldo, Pedro and Ramalingam, Srikumar},

- title = {{SurfR: Surface Reconstruction with Multi-scale Attention}},

- booktitle = {International Conference on 3D Vision (3DV)},

- year = 2025,

- month = mar,

- url = {https://www.merl.com/publications/TR2025-039}

- }

- Yizhou Wang, Kuan-Chuan Peng, Raymond Fu, "Towards Zero-shot 3D Anomaly Localization", IEEE Winter Conference on Applications of Computer Vision (WACV), S. Biswas, H. Averbuch-Elor, V. Štruc, and Y. Yang, Eds., DOI: 10.1109/WACV61041.2025.00148, February 2025, pp. 1447-1456. (TR2025-020)

- @inproceedings{Wang2025feb2,

- author = {Wang, Yizhou and Peng, Kuan-Chuan and Fu, Raymond},

- title = {{Towards Zero-shot 3D Anomaly Localization}},

- booktitle = {IEEE Winter Conference on Applications of Computer Vision (WACV)},

- year = 2025,

- editor = {Biswas, S. and Averbuch-Elor, H. and Štruc, V. and Yang, Y.},

- pages = {1447--1456},

- month = feb,

- publisher = {IEEE},

- doi = {10.1109/WACV61041.2025.00148},

- issn = {2642-9381},

- isbn = {979-8-3315-1083-1},

- url = {https://www.merl.com/publications/TR2025-020}

- }

- Furkan Mumcu, Michael J. Jones, Yasin Yilmaz, Anoop Cherian, "ComplexVAD: Detecting Interaction Anomalies in Video", IEEE Winter Conference on Applications of Computer Vision (WACV) Workshop, February 2025. (TR2025-016)

- @inproceedings{Mumcu2025feb,

- author = {Mumcu, Furkan and Jones, Michael J. and Yilmaz, Yasin and Cherian, Anoop},

- title = {{ComplexVAD: Detecting Interaction Anomalies in Video}},

- booktitle = {IEEE Winter Conference on Applications of Computer Vision (WACV) Workshop},

- year = 2025,

- month = feb,

- url = {https://www.merl.com/publications/TR2025-016}

- }

- Suhas Lohit, Tim K. Marks, "Rotation-Equivariant Neural Networks for Cloud Removal from Satellite Images", Asilomar Conference on Signals, Systems, and Computers (ACSSC), DOI: 10.1109/IEEECONF60004.2024.10942613, January 2025, pp. 1360-1365. (TR2025-009)

- @inproceedings{Lohit2025jan,

- author = {Lohit, Suhas and Marks, Tim K.},

- title = {{Rotation-Equivariant Neural Networks for Cloud Removal from Satellite Images}},

- booktitle = {2024 58th Asilomar Conference on Signals, Systems, and Computers (ACSSC)},

- year = 2025,

- pages = {1360--1365},

- month = jan,

- publisher = {IEEE},

- doi = {10.1109/IEEECONF60004.2024.10942613},

- issn = {2576-2303},

- isbn = {979-8-3503-5405-8},

- url = {https://www.merl.com/publications/TR2025-009}

- }

- Yuhang He, Sangyun Shin, Anoop Cherian, Niki Trigoni, Andrew Markham, "SoundLoc3D: Invisible 3D Sound Source Localization and Classification Using a Multimodal RGB-D Acoustic Camera", IEEE Winter Conference on Applications of Computer Vision (WACV), December 2024, pp. 5408-5418. (TR2025-003)

- @inproceedings{He2024dec2,

- author = {He, Yuhang and Shin, Sangyun and Cherian, Anoop and Trigoni, Niki and Markham, Andrew},

- title = {{SoundLoc3D: Invisible 3D Sound Source Localization and Classification Using a Multimodal RGB-D Acoustic Camera}},

- booktitle = {IEEE Winter Conference on Applications of Computer Vision (WACV)},

- year = 2024,

- pages = {5408--5418},

- month = dec,

- publisher = {CVF},

- url = {https://www.merl.com/publications/TR2025-003}

- }

- Jiahao Zhang, Frederic Zhang, Cristian Rodriguez, Itzik Ben-Shabat, Anoop Cherian, Stephen Gould, "Temporally Grounding Instructional Diagrams in Unconstrained Videos", IEEE Winter Conference on Applications of Computer Vision (WACV), December 2024, pp. 8090-8100. (TR2025-002)

- @inproceedings{Zhang2024dec,

- author = {Zhang, Jiahao and Zhang, Frederic and Rodriguez, Cristian and Ben-Shabat, Itzik and Cherian, Anoop and Gould, Stephen},

- title = {{Temporally Grounding Instructional Diagrams in Unconstrained Videos}},

- booktitle = {IEEE Winter Conference on Applications of Computer Vision (WACV)},

- year = 2024,

- pages = {8090--8100},

- month = dec,

- publisher = {CVF},

- url = {https://www.merl.com/publications/TR2025-002}

- }


Software & Data Downloads

- ComplexVAD Dataset
- Gear Extensions of Neural Radiance Fields
- Long-Tailed Anomaly Detection Dataset
- Pixel-Grounded Prototypical Part Networks
- Steered Diffusion
- BAyesian Network for adaptive SAmple Consensus
- Robust Frame-to-Frame Camera Rotation Estimation in Crowded Scenes
- Explainable Video Anomaly Localization
- Simple Multimodal Algorithmic Reasoning Task Dataset
- Partial Group Convolutional Neural Networks
- SOurce-free Cross-modal KnowledgE Transfer
- Audio-Visual-Language Embodied Navigation in 3D Environments
- 3D MOrphable STyleGAN
- Instance Segmentation GAN
- Audio Visual Scene-Graph Segmentor
- Generalized One-class Discriminative Subspaces
- Generating Visual Dynamics from Sound and Context
- Adversarially-Contrastive Optimal Transport
- MotionNet
- Street Scene Dataset
- FoldingNet++
- Landmarks’ Location, Uncertainty, and Visibility Likelihood
- Gradient-based Nikaido-Isoda
- Circular Maze Environment
- Discriminative Subspace Pooling
- Kernel Correlation Network
- Fast Resampling on Point Clouds via Graphs
- FoldingNet
- MERL Shopping Dataset
- Joint Geodesic Upsampling
- Plane Extraction using Agglomerative Clustering